Vertica MPP Database Overview and TPC-DS Benchmark Performance Analysis (Part 4)

Testing Continued…

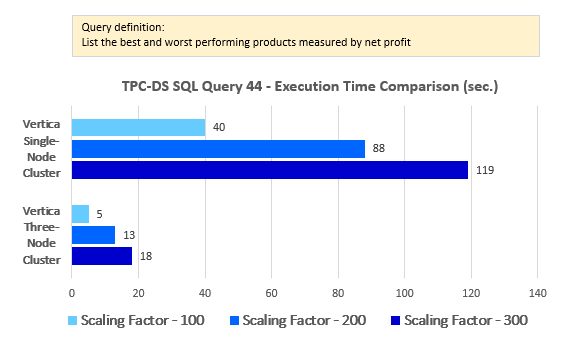

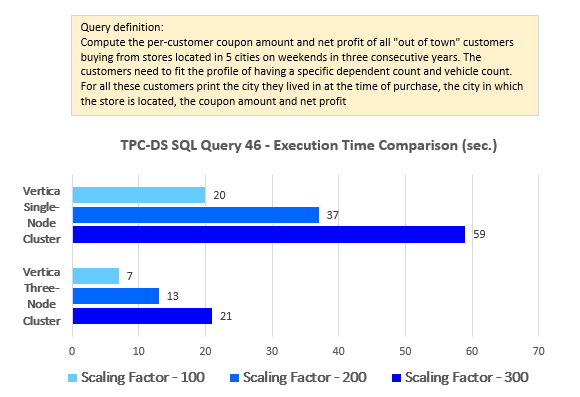

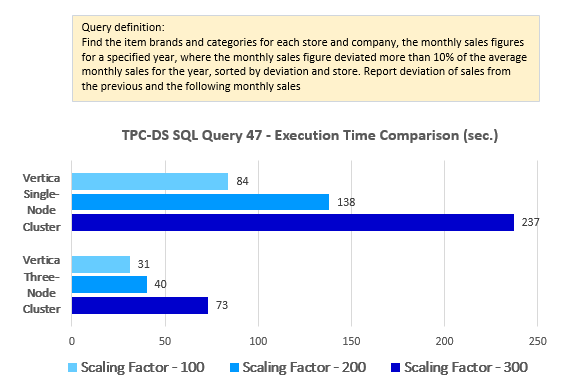

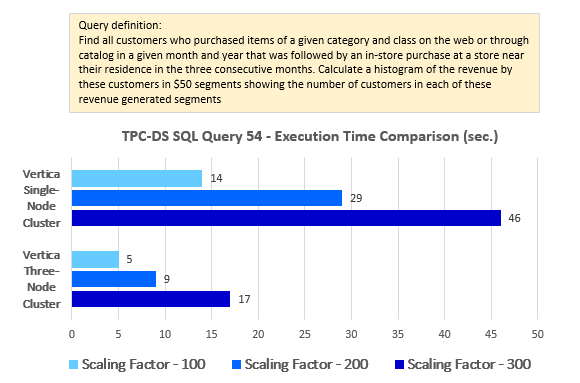

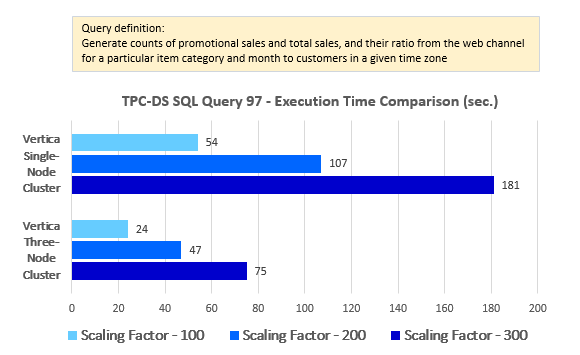

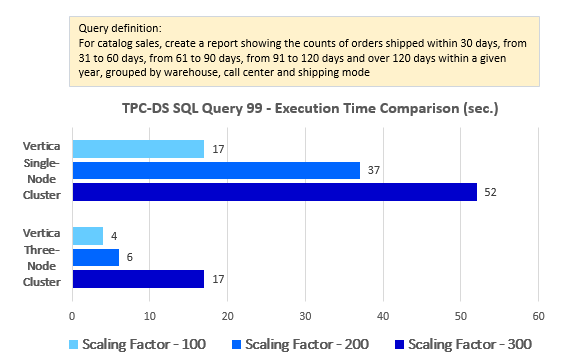

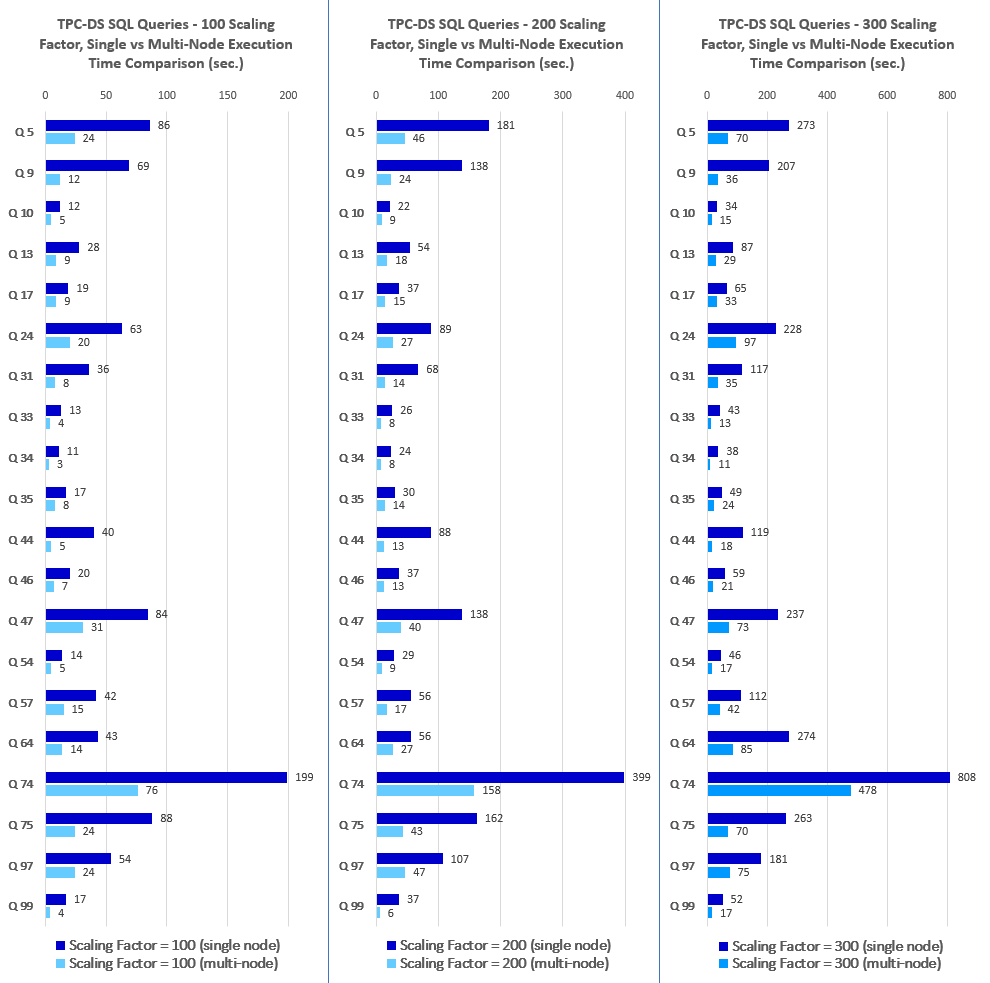

In the previous post I started looking at the performance of some of the TPC-DS queries across single-node and multi-node environments. This post continues the performance analysis with the remaining set of queries and looks at high-level interactions between Vertica and Tableau/PowerBI. First, though, let’s look at the remaining ten queries and how their execution times fared in the context of three different data and cluster sizes.

--Query 44

SELECT asceding.rnk,

i1.i_product_name best_performing,

i2.i_product_name worst_performing

FROM (SELECT *

FROM (SELECT item_sk,

Rank()

OVER (

ORDER BY rank_col ASC) rnk

FROM (SELECT ss_item_sk item_sk,

Avg(ss_net_profit) rank_col

FROM tpc_ds.store_sales ss1

WHERE ss_store_sk = 4

GROUP BY ss_item_sk

HAVING Avg(ss_net_profit) > 0.9 *

(SELECT Avg(ss_net_profit)

rank_col

FROM tpc_ds.store_sales

WHERE ss_store_sk = 4

AND ss_cdemo_sk IS

NULL

GROUP BY ss_store_sk))V1)

V11

WHERE rnk < 11) asceding,

(SELECT *

FROM (SELECT item_sk,

Rank()

OVER (

ORDER BY rank_col DESC) rnk

FROM (SELECT ss_item_sk item_sk,

Avg(ss_net_profit) rank_col

FROM tpc_ds.store_sales ss1

WHERE ss_store_sk = 4

GROUP BY ss_item_sk

HAVING Avg(ss_net_profit) > 0.9 *

(SELECT Avg(ss_net_profit)

rank_col

FROM tpc_ds.store_sales

WHERE ss_store_sk = 4

AND ss_cdemo_sk IS

NULL

GROUP BY ss_store_sk))V2)

V21

WHERE rnk < 11) descending,

tpc_ds.item i1,

tpc_ds.item i2

WHERE asceding.rnk = descending.rnk

AND i1.i_item_sk = asceding.item_sk

AND i2.i_item_sk = descending.item_sk

ORDER BY asceding.rnk

LIMIT 100;

--Query 46

SELECT c_last_name,

c_first_name,

ca_city,

bought_city,

ss_ticket_number,

amt,

profit

FROM (SELECT ss_ticket_number,

ss_customer_sk,

ca_city bought_city,

Sum(ss_coupon_amt) amt,

Sum(ss_net_profit) profit

FROM tpc_ds.store_sales,

tpc_ds.date_dim,

tpc_ds.store,

tpc_ds.household_demographics,

tpc_ds.customer_address

WHERE store_sales.ss_sold_date_sk = date_dim.d_date_sk

AND store_sales.ss_store_sk = store.s_store_sk

AND store_sales.ss_hdemo_sk = household_demographics.hd_demo_sk

AND store_sales.ss_addr_sk = customer_address.ca_address_sk

AND ( household_demographics.hd_dep_count = 6

OR household_demographics.hd_vehicle_count = 0 )

AND date_dim.d_dow IN ( 6, 0 )

AND date_dim.d_year IN ( 2000, 2000 + 1, 2000 + 2 )

AND store.s_city IN ( 'Midway', 'Fairview', 'Fairview',

'Fairview',

'Fairview' )

GROUP BY ss_ticket_number,

ss_customer_sk,

ss_addr_sk,

ca_city) dn,

tpc_ds.customer,

tpc_ds.customer_address current_addr

WHERE ss_customer_sk = c_customer_sk

AND customer.c_current_addr_sk = current_addr.ca_address_sk

AND current_addr.ca_city <> bought_city

ORDER BY c_last_name,

c_first_name,

ca_city,

bought_city,

ss_ticket_number

LIMIT 100;

--Query 47

WITH v1

AS (SELECT i_category,

i_brand,

s_store_name,

s_company_name,

d_year,

d_moy,

Sum(ss_sales_price) sum_sales,

Avg(Sum(ss_sales_price))

OVER (

partition BY i_category, i_brand, s_store_name,

s_company_name,

d_year)

avg_monthly_sales,

Rank()

OVER (

partition BY i_category, i_brand, s_store_name,

s_company_name

ORDER BY d_year, d_moy) rn

FROM tpc_ds.item,

tpc_ds.store_sales,

tpc_ds.date_dim,

tpc_ds.store

WHERE ss_item_sk = i_item_sk

AND ss_sold_date_sk = d_date_sk

AND ss_store_sk = s_store_sk

AND ( d_year = 1999

OR ( d_year = 1999 - 1

AND d_moy = 12 )

OR ( d_year = 1999 + 1

AND d_moy = 1 ) )

GROUP BY i_category,

i_brand,

s_store_name,

s_company_name,

d_year,

d_moy),

v2

AS (SELECT v1.i_category,

v1.d_year,

v1.d_moy,

v1.avg_monthly_sales,

v1.sum_sales,

v1_lag.sum_sales psum,

v1_lead.sum_sales nsum

FROM v1,

v1 v1_lag,

v1 v1_lead

WHERE v1.i_category = v1_lag.i_category

AND v1.i_category = v1_lead.i_category

AND v1.i_brand = v1_lag.i_brand

AND v1.i_brand = v1_lead.i_brand

AND v1.s_store_name = v1_lag.s_store_name

AND v1.s_store_name = v1_lead.s_store_name

AND v1.s_company_name = v1_lag.s_company_name

AND v1.s_company_name = v1_lead.s_company_name

AND v1.rn = v1_lag.rn + 1

AND v1.rn = v1_lead.rn - 1)

SELECT *

FROM v2

WHERE d_year = 1999

AND avg_monthly_sales > 0

AND CASE

WHEN avg_monthly_sales > 0 THEN Abs(sum_sales - avg_monthly_sales)

/

avg_monthly_sales

ELSE NULL

END > 0.1

ORDER BY sum_sales - avg_monthly_sales,

3

LIMIT 100;

--Query 54

WITH my_customers

AS (SELECT DISTINCT c_customer_sk,

c_current_addr_sk

FROM (SELECT cs_sold_date_sk sold_date_sk,

cs_bill_customer_sk customer_sk,

cs_item_sk item_sk

FROM tpc_ds.catalog_sales

UNION ALL

SELECT ws_sold_date_sk sold_date_sk,

ws_bill_customer_sk customer_sk,

ws_item_sk item_sk

FROM tpc_ds.web_sales) cs_or_ws_sales,

tpc_ds.item,

tpc_ds.date_dim,

tpc_ds.customer

WHERE sold_date_sk = d_date_sk

AND item_sk = i_item_sk

AND i_category = 'Sports'

AND i_class = 'fitness'

AND c_customer_sk = cs_or_ws_sales.customer_sk

AND d_moy = 5

AND d_year = 2000),

my_revenue

AS (SELECT c_customer_sk,

Sum(ss_ext_sales_price) AS revenue

FROM my_customers,

tpc_ds.store_sales,

tpc_ds.customer_address,

tpc_ds.store,

tpc_ds.date_dim

WHERE c_current_addr_sk = ca_address_sk

AND ca_county = s_county

AND ca_state = s_state

AND ss_sold_date_sk = d_date_sk

AND c_customer_sk = ss_customer_sk

AND d_month_seq BETWEEN (SELECT DISTINCT d_month_seq + 1

FROM tpc_ds.date_dim

WHERE d_year = 2000

AND d_moy = 5) AND

(SELECT DISTINCT

d_month_seq + 3

FROM tpc_ds.date_dim

WHERE d_year = 2000

AND d_moy = 5)

GROUP BY c_customer_sk),

segments

AS (SELECT Cast(( revenue / 50 ) AS INT) AS segment

FROM my_revenue)

SELECT segment,

Count(*) AS num_customers,

segment * 50 AS segment_base

FROM segments

GROUP BY segment

ORDER BY segment,

num_customers

LIMIT 100;

--Query 57

WITH v1

AS (SELECT i_category,

i_brand,

cc_name,

d_year,

d_moy,

Sum(cs_sales_price) sum_sales

,

Avg(Sum(cs_sales_price))

OVER (

partition BY i_category, i_brand, cc_name, d_year)

avg_monthly_sales

,

Rank()

OVER (

partition BY i_category, i_brand, cc_name

ORDER BY d_year, d_moy) rn

FROM tpc_ds.item,

tpc_ds.catalog_sales,

tpc_ds.date_dim,

tpc_ds.call_center

WHERE cs_item_sk = i_item_sk

AND cs_sold_date_sk = d_date_sk

AND cc_call_center_sk = cs_call_center_sk

AND ( d_year = 2000

OR ( d_year = 2000 - 1

AND d_moy = 12 )

OR ( d_year = 2000 + 1

AND d_moy = 1 ) )

GROUP BY i_category,

i_brand,

cc_name,

d_year,

d_moy),

v2

AS (SELECT v1.i_brand,

v1.d_year,

v1.avg_monthly_sales,

v1.sum_sales,

v1_lag.sum_sales psum,

v1_lead.sum_sales nsum

FROM v1,

v1 v1_lag,

v1 v1_lead

WHERE v1.i_category = v1_lag.i_category

AND v1.i_category = v1_lead.i_category

AND v1.i_brand = v1_lag.i_brand

AND v1.i_brand = v1_lead.i_brand

AND v1.cc_name = v1_lag.cc_name

AND v1.cc_name = v1_lead.cc_name

AND v1.rn = v1_lag.rn + 1

AND v1.rn = v1_lead.rn - 1)

SELECT *

FROM v2

WHERE d_year = 2000

AND avg_monthly_sales > 0

AND CASE

WHEN avg_monthly_sales > 0 THEN Abs(sum_sales - avg_monthly_sales)

/

avg_monthly_sales

ELSE NULL

END > 0.1

ORDER BY sum_sales - avg_monthly_sales,

3

LIMIT 100;

--Query 64

WITH cs_ui

AS (SELECT cs_item_sk,

Sum(cs_ext_list_price) AS sale,

Sum(cr_refunded_cash + cr_reversed_charge

+ cr_store_credit) AS refund

FROM tpc_ds.catalog_sales,

tpc_ds.catalog_returns

WHERE cs_item_sk = cr_item_sk

AND cs_order_number = cr_order_number

GROUP BY cs_item_sk

HAVING Sum(cs_ext_list_price) > 2 * Sum(

cr_refunded_cash + cr_reversed_charge

+ cr_store_credit)),

cross_sales

AS (SELECT i_product_name product_name,

i_item_sk item_sk,

s_store_name store_name,

s_zip store_zip,

ad1.ca_street_number b_street_number,

ad1.ca_street_name b_streen_name,

ad1.ca_city b_city,

ad1.ca_zip b_zip,

ad2.ca_street_number c_street_number,

ad2.ca_street_name c_street_name,

ad2.ca_city c_city,

ad2.ca_zip c_zip,

d1.d_year AS syear,

d2.d_year AS fsyear,

d3.d_year s2year,

Count(*) cnt,

Sum(ss_wholesale_cost) s1,

Sum(ss_list_price) s2,

Sum(ss_coupon_amt) s3

FROM tpc_ds.store_sales,

tpc_ds.store_returns,

cs_ui,

tpc_ds.date_dim d1,

tpc_ds.date_dim d2,

tpc_ds.date_dim d3,

tpc_ds.store,

tpc_ds.customer,

tpc_ds.customer_demographics cd1,

tpc_ds.customer_demographics cd2,

tpc_ds.promotion,

tpc_ds.household_demographics hd1,

tpc_ds.household_demographics hd2,

tpc_ds.customer_address ad1,

tpc_ds.customer_address ad2,

tpc_ds.income_band ib1,

tpc_ds.income_band ib2,

tpc_ds.item

WHERE ss_store_sk = s_store_sk

AND ss_sold_date_sk = d1.d_date_sk

AND ss_customer_sk = c_customer_sk

AND ss_cdemo_sk = cd1.cd_demo_sk

AND ss_hdemo_sk = hd1.hd_demo_sk

AND ss_addr_sk = ad1.ca_address_sk

AND ss_item_sk = i_item_sk

AND ss_item_sk = sr_item_sk

AND ss_ticket_number = sr_ticket_number

AND ss_item_sk = cs_ui.cs_item_sk

AND c_current_cdemo_sk = cd2.cd_demo_sk

AND c_current_hdemo_sk = hd2.hd_demo_sk

AND c_current_addr_sk = ad2.ca_address_sk

AND c_first_sales_date_sk = d2.d_date_sk

AND c_first_shipto_date_sk = d3.d_date_sk

AND ss_promo_sk = p_promo_sk

AND hd1.hd_income_band_sk = ib1.ib_income_band_sk

AND hd2.hd_income_band_sk = ib2.ib_income_band_sk

AND cd1.cd_marital_status <> cd2.cd_marital_status

AND i_color IN ( 'cyan', 'peach', 'blush', 'frosted',

'powder', 'orange' )

AND i_current_price BETWEEN 58 AND 58 + 10

AND i_current_price BETWEEN 58 + 1 AND 58 + 15

GROUP BY i_product_name,

i_item_sk,

s_store_name,

s_zip,

ad1.ca_street_number,

ad1.ca_street_name,

ad1.ca_city,

ad1.ca_zip,

ad2.ca_street_number,

ad2.ca_street_name,

ad2.ca_city,

ad2.ca_zip,

d1.d_year,

d2.d_year,

d3.d_year)

SELECT cs1.product_name,

cs1.store_name,

cs1.store_zip,

cs1.b_street_number,

cs1.b_streen_name,

cs1.b_city,

cs1.b_zip,

cs1.c_street_number,

cs1.c_street_name,

cs1.c_city,

cs1.c_zip,

cs1.syear,

cs1.cnt,

cs1.s1,

cs1.s2,

cs1.s3,

cs2.s1,

cs2.s2,

cs2.s3,

cs2.syear,

cs2.cnt

FROM cross_sales cs1,

cross_sales cs2

WHERE cs1.item_sk = cs2.item_sk

AND cs1.syear = 2001

AND cs2.syear = 2001 + 1

AND cs2.cnt <= cs1.cnt

AND cs1.store_name = cs2.store_name

AND cs1.store_zip = cs2.store_zip

ORDER BY cs1.product_name,

cs1.store_name,

cs2.cnt;

--Query 74

WITH year_total

AS (SELECT c_customer_id customer_id,

c_first_name customer_first_name,

c_last_name customer_last_name,

d_year AS year1,

Sum(ss_net_paid) year_total,

's' sale_type

FROM tpc_ds.customer,

tpc_ds.store_sales,

tpc_ds.date_dim

WHERE c_customer_sk = ss_customer_sk

AND ss_sold_date_sk = d_date_sk

AND d_year IN ( 1999, 1999 + 1 )

GROUP BY c_customer_id,

c_first_name,

c_last_name,

d_year

UNION ALL

SELECT c_customer_id customer_id,

c_first_name customer_first_name,

c_last_name customer_last_name,

d_year AS year1,

Sum(ws_net_paid) year_total,

'w' sale_type

FROM tpc_ds.customer,

tpc_ds.web_sales,

tpc_ds.date_dim

WHERE c_customer_sk = ws_bill_customer_sk

AND ws_sold_date_sk = d_date_sk

AND d_year IN ( 1999, 1999 + 1 )

GROUP BY c_customer_id,

c_first_name,

c_last_name,

d_year)

SELECT t_s_secyear.customer_id,

t_s_secyear.customer_first_name,

t_s_secyear.customer_last_name

FROM year_total t_s_firstyear,

year_total t_s_secyear,

year_total t_w_firstyear,

year_total t_w_secyear

WHERE t_s_secyear.customer_id = t_s_firstyear.customer_id

AND t_s_firstyear.customer_id = t_w_secyear.customer_id

AND t_s_firstyear.customer_id = t_w_firstyear.customer_id

AND t_s_firstyear.sale_type = 's'

AND t_w_firstyear.sale_type = 'w'

AND t_s_secyear.sale_type = 's'

AND t_w_secyear.sale_type = 'w'

AND t_s_firstyear.year1 = 1999

AND t_s_secyear.year1 = 1999 + 1

AND t_w_firstyear.year1 = 1999

AND t_w_secyear.year1 = 1999 + 1

AND t_s_firstyear.year_total > 0

AND t_w_firstyear.year_total > 0

AND CASE

WHEN t_w_firstyear.year_total > 0 THEN t_w_secyear.year_total /

t_w_firstyear.year_total

ELSE NULL

END > CASE

WHEN t_s_firstyear.year_total > 0 THEN

t_s_secyear.year_total /

t_s_firstyear.year_total

ELSE NULL

END

ORDER BY 1,

2,

3

LIMIT 100;

--Query 75

WITH all_sales

AS (SELECT d_year,

i_brand_id,

i_class_id,

i_category_id,

i_manufact_id,

Sum(sales_cnt) AS sales_cnt,

Sum(sales_amt) AS sales_amt

FROM (SELECT d_year,

i_brand_id,

i_class_id,

i_category_id,

i_manufact_id,

cs_quantity - COALESCE(cr_return_quantity, 0) AS

sales_cnt,

cs_ext_sales_price - COALESCE(cr_return_amount, 0.0) AS

sales_amt

FROM tpc_ds.catalog_sales

JOIN tpc_ds.item

ON i_item_sk = cs_item_sk

JOIN tpc_ds.date_dim

ON d_date_sk = cs_sold_date_sk

LEFT JOIN tpc_ds.catalog_returns

ON ( cs_order_number = cr_order_number

AND cs_item_sk = cr_item_sk )

WHERE i_category = 'Men'

UNION

SELECT d_year,

i_brand_id,

i_class_id,

i_category_id,

i_manufact_id,

ss_quantity - COALESCE(sr_return_quantity, 0) AS

sales_cnt,

ss_ext_sales_price - COALESCE(sr_return_amt, 0.0) AS

sales_amt

FROM tpc_ds.store_sales

JOIN tpc_ds.item

ON i_item_sk = ss_item_sk

JOIN tpc_ds.date_dim

ON d_date_sk = ss_sold_date_sk

LEFT JOIN tpc_ds.store_returns

ON ( ss_ticket_number = sr_ticket_number

AND ss_item_sk = sr_item_sk )

WHERE i_category = 'Men'

UNION

SELECT d_year,

i_brand_id,

i_class_id,

i_category_id,

i_manufact_id,

ws_quantity - COALESCE(wr_return_quantity, 0) AS

sales_cnt,

ws_ext_sales_price - COALESCE(wr_return_amt, 0.0) AS

sales_amt

FROM tpc_ds.web_sales

JOIN tpc_ds.item

ON i_item_sk = ws_item_sk

JOIN tpc_ds.date_dim

ON d_date_sk = ws_sold_date_sk

LEFT JOIN tpc_ds.web_returns

ON ( ws_order_number = wr_order_number

AND ws_item_sk = wr_item_sk )

WHERE i_category = 'Men') sales_detail

GROUP BY d_year,

i_brand_id,

i_class_id,

i_category_id,

i_manufact_id)

SELECT prev_yr.d_year AS prev_year,

curr_yr.d_year AS year1,

curr_yr.i_brand_id,

curr_yr.i_class_id,

curr_yr.i_category_id,

curr_yr.i_manufact_id,

prev_yr.sales_cnt AS prev_yr_cnt,

curr_yr.sales_cnt AS curr_yr_cnt,

curr_yr.sales_cnt - prev_yr.sales_cnt AS sales_cnt_diff,

curr_yr.sales_amt - prev_yr.sales_amt AS sales_amt_diff

FROM all_sales curr_yr,

all_sales prev_yr

WHERE curr_yr.i_brand_id = prev_yr.i_brand_id

AND curr_yr.i_class_id = prev_yr.i_class_id

AND curr_yr.i_category_id = prev_yr.i_category_id

AND curr_yr.i_manufact_id = prev_yr.i_manufact_id

AND curr_yr.d_year = 2002

AND prev_yr.d_year = 2002 - 1

AND Cast(curr_yr.sales_cnt AS DECIMAL(17, 2)) / Cast(prev_yr.sales_cnt AS

DECIMAL(17, 2))

< 0.9

ORDER BY sales_cnt_diff

LIMIT 100;

--Query 97

WITH ssci

AS (SELECT ss_customer_sk customer_sk,

ss_item_sk item_sk

FROM tpc_ds.store_sales,

tpc_ds.date_dim

WHERE ss_sold_date_sk = d_date_sk

AND d_month_seq BETWEEN 1196 AND 1196 + 11

GROUP BY ss_customer_sk,

ss_item_sk),

csci

AS (SELECT cs_bill_customer_sk customer_sk,

cs_item_sk item_sk

FROM tpc_ds.catalog_sales,

tpc_ds.date_dim

WHERE cs_sold_date_sk = d_date_sk

AND d_month_seq BETWEEN 1196 AND 1196 + 11

GROUP BY cs_bill_customer_sk,

cs_item_sk)

SELECT Sum(CASE

WHEN ssci.customer_sk IS NOT NULL

AND csci.customer_sk IS NULL THEN 1

ELSE 0

END) store_only,

Sum(CASE

WHEN ssci.customer_sk IS NULL

AND csci.customer_sk IS NOT NULL THEN 1

ELSE 0

END) catalog_only,

Sum(CASE

WHEN ssci.customer_sk IS NOT NULL

AND csci.customer_sk IS NOT NULL THEN 1

ELSE 0

END) store_and_catalog

FROM ssci

FULL OUTER JOIN csci

ON ( ssci.customer_sk = csci.customer_sk

AND ssci.item_sk = csci.item_sk )

LIMIT 100;

--Query 99

SELECT Substr(w_warehouse_name, 1, 20),

sm_type,

cc_name,

Sum(CASE

WHEN ( cs_ship_date_sk - cs_sold_date_sk <= 30 ) THEN 1

ELSE 0

END) AS '30 days',

Sum(CASE

WHEN ( cs_ship_date_sk - cs_sold_date_sk > 30 )

AND ( cs_ship_date_sk - cs_sold_date_sk <= 60 ) THEN 1

ELSE 0

END) AS '31-60 days',

Sum(CASE

WHEN ( cs_ship_date_sk - cs_sold_date_sk > 60 )

AND ( cs_ship_date_sk - cs_sold_date_sk <= 90 ) THEN 1

ELSE 0

END) AS '61-90 days',

Sum(CASE

WHEN ( cs_ship_date_sk - cs_sold_date_sk > 90 )

AND ( cs_ship_date_sk - cs_sold_date_sk <= 120 ) THEN

1

ELSE 0

END) AS '91-120 days',

Sum(CASE

WHEN ( cs_ship_date_sk - cs_sold_date_sk > 120 ) THEN 1

ELSE 0

END) AS '>120 days'

FROM tpc_ds.catalog_sales,

tpc_ds.warehouse,

tpc_ds.ship_mode,

tpc_ds.call_center,

tpc_ds.date_dim

WHERE d_month_seq BETWEEN 1200 AND 1200 + 11

AND cs_ship_date_sk = d_date_sk

AND cs_warehouse_sk = w_warehouse_sk

AND cs_ship_mode_sk = sm_ship_mode_sk

AND cs_call_center_sk = cc_call_center_sk

GROUP BY Substr(w_warehouse_name, 1, 20),

sm_type,

cc_name

ORDER BY Substr(w_warehouse_name, 1, 20),

sm_type,

cc_name

LIMIT 100;

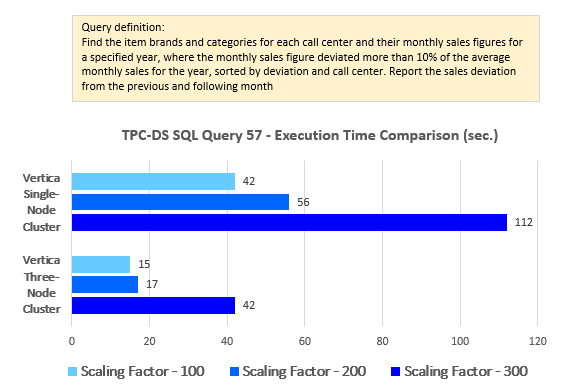

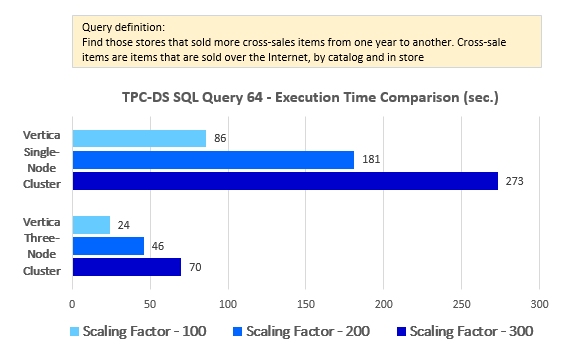

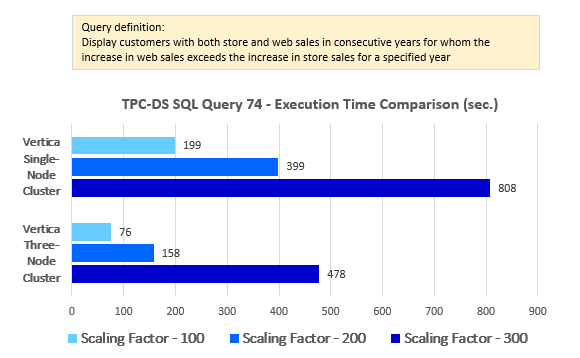

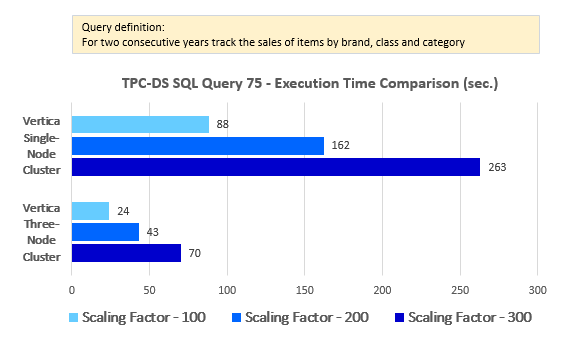

I didn’t set out on this experiment to compare Vertica’s performance to other vendors in the MPP category, so I cannot comment on how it would fare against other RDBMS products. It would have been interesting to see how, for example, Pivotal’s Greenplum would match up on identical hardware and data volumes, but this four-part series was primarily focused on establishing the relationship between two variables: the number of nodes and data size. From that perspective, Vertica performs very well ‘out-of-the-box’. Without any tuning or tweaking it managed not only to execute the queries relatively fast (given the mediocre hardware specs) but also to take full advantage of the extra computing resources, distributing the load across the available nodes and cutting query execution times.

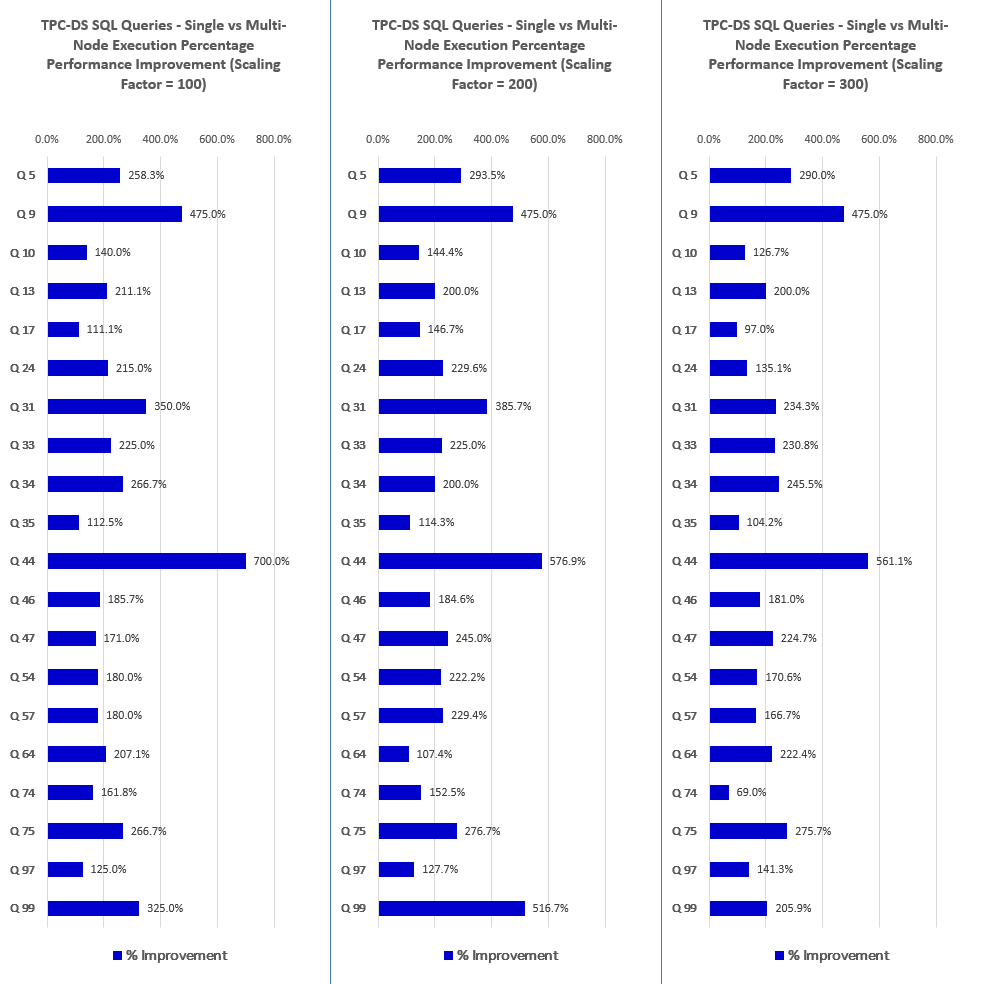

Looking at the results across the different configurations, the first thing that jumps out is that the differences in performance across data volumes and node counts are very linear. On average, query execution time doubles for every scaling factor increase, and execution speed goes down by a factor of two for every node that’s removed. There are some slight variations, but on the whole Vertica’s performance is consistent and predictable, with all twenty queries exhibiting a similar pattern when dealing with more data and/or more computing power (additional nodes).
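A linearity claim of this kind can be sanity-checked with a few lines of arithmetic. The sketch below is in Python and uses made-up timings: the `timings` dictionary and both helper functions are hypothetical illustrations, not the measured results or the method used for this post. It divides each execution time by its scaling factor, so a roughly constant value per node count indicates linear scaling with data volume.

```python
# Hypothetical execution times in seconds for one query, keyed by
# (node_count, scaling_factor). Illustrative numbers only, not the
# benchmark results discussed in this post.
timings = {
    (1, 100): 30.0, (1, 200): 61.0, (1, 300): 92.0,
    (3, 100): 10.5, (3, 200): 20.0, (3, 300): 31.0,
}


def seconds_per_scale_unit(timings):
    """Return execution time divided by scaling factor for every run."""
    return {key: secs / key[1] for key, secs in timings.items()}


def is_roughly_linear(values, tolerance=0.1):
    """True if every value lies within `tolerance` (relative) of the mean."""
    mean = sum(values) / len(values)
    return all(abs(v - mean) / mean <= tolerance for v in values)


normalised = seconds_per_scale_unit(timings)
for nodes in (1, 3):
    # Collect the normalised times for this node count, ordered by scaling factor.
    per_unit = [v for (n, _), v in sorted(normalised.items()) if n == nodes]
    print(nodes, [round(v, 3) for v in per_unit], is_roughly_linear(per_unit))
```

The 10% tolerance is an arbitrary choice for the sketch; a tighter or looser threshold may suit real measurements better.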

Looking at the side-by-side comparison of the execution results across single and multi-node configurations, the differences across scaling factors of 100, 200 and 300 are very consistent.

Converting these differences (single vs multi-node deployment) into percentages yielded an average increase of 243% for the scaling factor of 100, 252% for the scaling factor of 200 and 217% for the scaling factor of 300, as per the chart below.
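For reference, a percentage figure of this kind could be derived as shown below. This is a minimal sketch in Python, assuming the increase is measured as the extra single-node time relative to the multi-node time. The post does not spell out the exact formula, and the function names and sample timings are hypothetical.

```python
def percent_increase(single_node_secs, multi_node_secs):
    """Percentage by which the single-node time exceeds the multi-node time."""
    return (single_node_secs - multi_node_secs) / multi_node_secs * 100.0


def average_percent_increase(pairs):
    """Average the per-query percentage increases over (single, multi) pairs."""
    increases = [percent_increase(single, multi) for single, multi in pairs]
    return sum(increases) / len(increases)


# Hypothetical (single-node, three-node) timings in seconds for three queries.
sample = [(35.0, 10.0), (12.0, 4.0), (66.0, 20.0)]
print(f"{average_percent_increase(sample):.0f}%")
```

Under this assumed definition, a 243% increase would mean the single-node run took roughly 3.4 times as long as the multi-node run.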

Finally, let’s look at how Vertica (a three-node cluster with TPC-DS data at a scaling factor of 100) performed against some ad hoc queries generated in PowerBI and Tableau. Below are two short video clips depicting a rudimentary analysis of store sales data (this fact table contains close to 300 million records) against the date, item, store and customer_address dimensions. It’s the type of rapid-fire, exploratory analysis one would conduct on retail data to answer some immediate questions. I also set up Tableau and PowerBI side by side with a process viewer/system monitor (htop), with four panes displaying core performance metrics, e.g. CPU load, swap and memory status, across the three nodes and the machine hosting the visualisation application (bottom view), so that I could observe (and record) the systems’ behaviour when interactively aggregating data. In this way it was easy to see the load distribution and how each host reacted (performance-wise) to the queries issued during this analysis. With respect to PowerBI, you can see that the three-year-old work laptop I recorded this on was not up to the task, due to the large number of CPU cycles consumed by the screen-recording software alone. On the flip side, Tableau ran on my dual-CPU Mac Pro, so the whole process was stutter-free, which suggests that, given access to better hardware, PowerBI may also perform better.

I used Tableau Desktop version 2018.1 with native support for Vertica. Unlike PowerBI, Tableau had no issues reading data from the ‘customer’ dimension, but in order to make the two recordings as comparable as possible I abandoned it and used ‘customer_address’ instead. In terms of raw performance, this mini-cluster actually turned out to be borderline usable, and in most cases I didn’t need to wait more than 10 seconds for aggregation or filtering to complete. Each node’s CPU spiked close to 100 percent during query execution, but given the volume of data and the mediocre hardware it was crunched on, I would say that Vertica performed quite well.

PowerBI version 2.59 (used in the footage below) included Vertica support in Beta only, so chances are the final release will be much better supported and optimised. PowerBI wasn’t too cooperative when selecting data out of the ‘customer’ dimension (see footage below), but everything else worked as expected, e.g. geospatial analysis, various graphs etc. Performance-wise, I still think that Tableau was more workable, but given that Vertica support was still in preview and that I was testing it on a pedestrian-quality laptop, chances are that PowerBI would be a viable choice for such exploratory analysis, especially when factoring in the price: free!

Conclusion

There isn’t much I can add to what I have already stated based on my observations and performance results. Vertica’s easy set-up and hassle-free, almost plug-in configuration made it very easy to work with. While I haven’t covered most of the built-in ‘knobs and switches’ for fine-tuning its functionality, the installation process was simple and more intuitive than that of some other commercial RDBMS products out there, even the ones which come with pre-built binaries and executable packages. I only wish Vertica came with support for the latest Ubuntu Server LTS version: at the time of writing this post, Ubuntu Bionic Beaver was the latest LTS release, while Vertica 9.1’s Ubuntu support only extended to version 14, dating back to July 2016 and supported only until April 2019.

Performance-wise, Vertica exceeded my expectations. Running on hardware which I had no real use for and originally intended to sell on eBay for a few hundred dollars (if lucky), it managed to crunch through large volumes of data (by relative standards) in a very respectable time. Some of the more processing-intensive queries executed in seconds and scaled well (linearly) across additional nodes and increased data volumes. It would be a treat to compare it against the likes of Greenplum or CitusDB and to explore some of its other features, e.g. Machine Learning or Hadoop integration (an idea for a future blog post), as SQL query execution speed on structured data isn’t its only forte.

This entry was posted on Monday, June 11th, 2018 at 12:48 pm and is filed under MPP RDBMS, SQL.
