SQL Server Data Synchronization Automation Using Tablediff Utility and SSIS – Part 2

In the FIRST post this short series I briefly outlined tablediff utility and set the stage (databases, objects, directories and batch file) for the SSIS package responsible for data synchronization workflow. In this post I will continue with the solution development and show you how to build and configure the package to take advantage of the tablediff tool and merge data across the 2 databases and their objects deployed as per PART 1 prelude. The complete package can be downloaded from my SkyDrive folder HERE if you wish to use it as a template – just make sure you adjust all necessary parameters e.g. database connection details etc. to correspond to your environment. I have also attached a short video footage depicting the package execution and the outcome on the tables’ data synchronization and SQL files management at the end of this post.

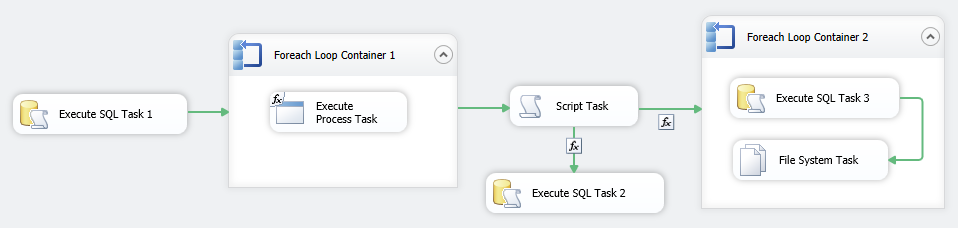

The complete package looks as per image below, with the core functionality encapsulated in the first two sections. The remaining Script Task and the second ForEach Loop Container are there to provide additional features i.e. the Script Task checking whether the file(s) have been created in the nominated directories and the second ForEach Loop Container to execute scripts generated by the tablediff utility and archive the files processed into a separate directory.

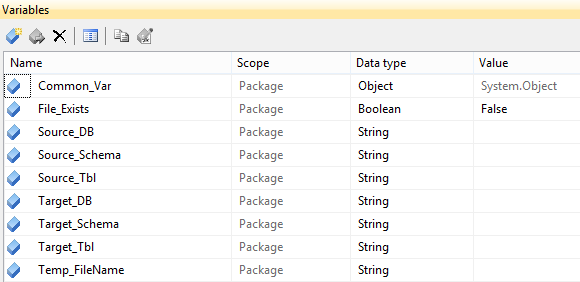

Let’s start with creating a bunch of variables which will house the data temporarily used during package runtime or provide value-dependant execution topology. Most of the variables are pretty self-explanatory with names reflecting their function. Common_Var variable of the System.Object type will house the results of the query against the Params_details table (see previous post HERE) whereas the Boolean File_Exists variable is populated by the Script Task and its value determines execution pathway depending on whether the file(s) have been created or not. Finally, Temp_FileName is used by the second ForEach Loop Container in iterating through SQL files created.

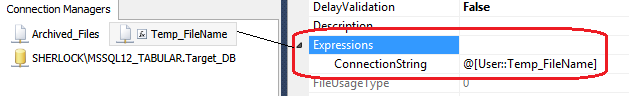

Looking at the Connection Manager, there is one database connection pointing to the Source_DB database and 2 file connections – Archived_Files which points to the archive directory (C:\Diffoutput\Archived_Files) and Temp_FileName one which is using an expression for connection string as per image below.

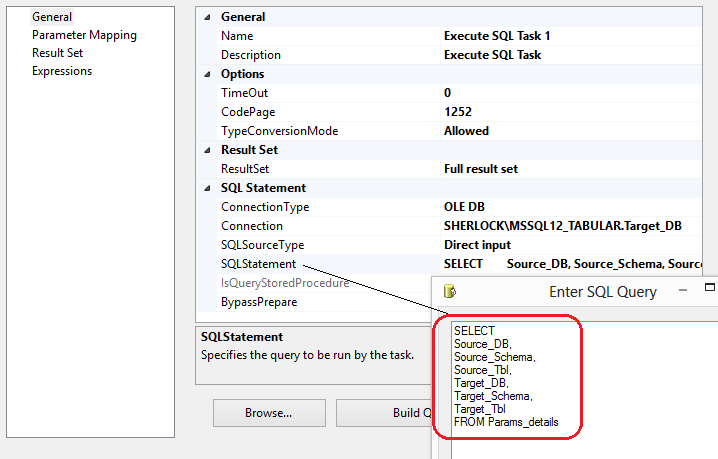

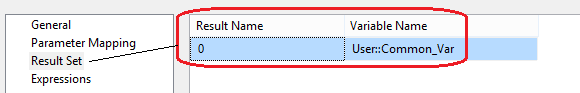

Let’s go through the Control Flow pane now and outline the individual containers roles. Starting with Execute SQL Task 1 transformation, it simply executes the SELECT SQL statement, returning the data as a full results set, which in turn is mapped to the Common_Var variable as per below.

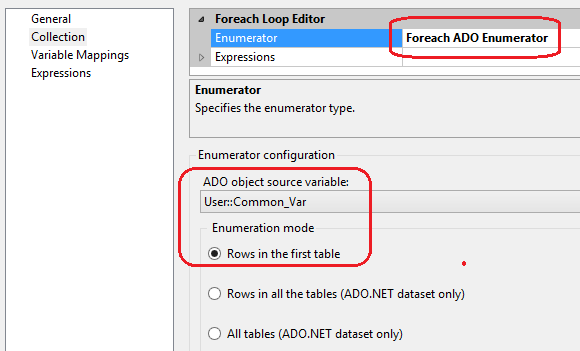

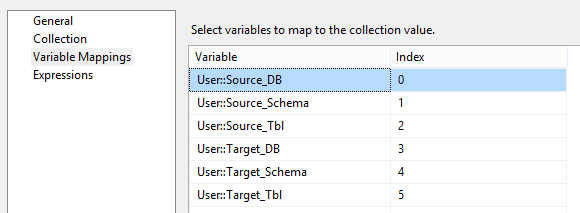

Next, the first ForEach Loop Container is configured to use the ADO object source variable Common_Var and the following variables mappings.

Note that the column order used in the SELECT SQL statement from the first transformation is corresponding to the variables assignment in the first ForEach Loop Container, otherwise the package will fail.

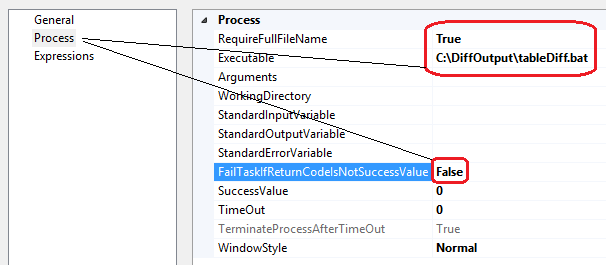

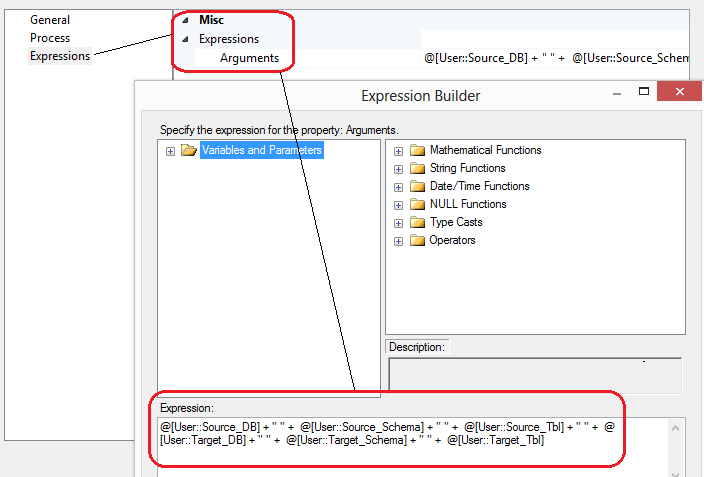

Execute Process Task simply runs the batch file saved in the nominated directory, passing the variables as arguments in a form of an expression as per below. Also, note that the order in which those appear in the expression corresponds to the SELECT SQL statement attributes order and variable mapping in the ForEach Loop Container order.

Notice that the FailTaskIfReturnCodeIsNotSuccessValue property is set to False. This is due to the fact that if no changes are detected, the tablediff will return SucessValue of 1 which effectively fails package execution. As we don’t want to treat this as an error or exception FailTaskIfReturnCodeIsNotSuccessValue property needs to be changed from its default setting to False value.

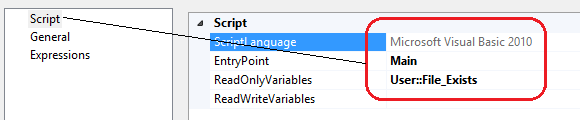

Following on, we have a Script Component with a very simply VB.NET code snippet checking for SQL files existence. I know in the first post I said that no code will be required but this section is not compulsory, uses default SSIS functionality and is only there as an enhancement so you can remove it if you wish. The File_Exists variable is also listed in the ReadOnlyVariables property.

#Region "Imports"

Imports System

Imports System.Data

Imports System.Math

Imports Microsoft.SqlServer.Dts.Runtime

Imports System.IO

#End Region

<Microsoft.SqlServer.Dts.Tasks.ScriptTask.SSISScriptTaskEntryPointAttribute()> _

<System.CLSCompliantAttribute(False)> _

Partial Public Class ScriptMain

Inherits Microsoft.SqlServer.Dts.Tasks.ScriptTask.VSTARTScriptObjectModelBase

Public Sub Main()

Dim directory As DirectoryInfo = New DirectoryInfo("c:\DiffOutput")

Dim file As FileInfo() = directory.GetFiles("*.sql")

If file.Length > 0 Then

Dts.Variables("User::File_Exists").Value = True

Else

Dts.Variables("User::File_Exists").Value = False

End If

Dts.TaskResult = ScriptResults.Success

Dts.TaskResult = ScriptResults.Success

End Sub

#Region "ScriptResults declaration"

Enum ScriptResults

Success = Microsoft.SqlServer.Dts.Runtime.DTSExecResult.Success

Failure = Microsoft.SqlServer.Dts.Runtime.DTSExecResult.Failure

End Enum

#End Region

End Class

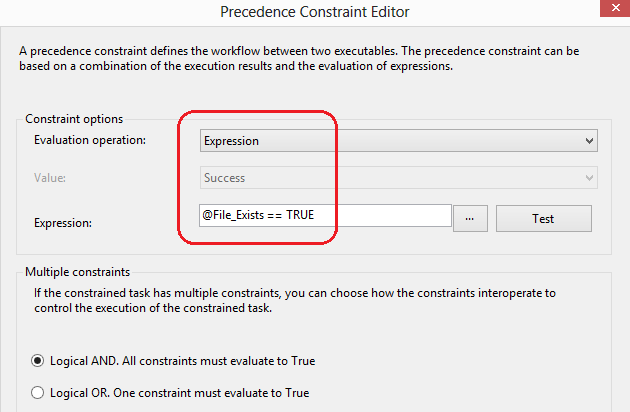

This code also populates File_Exists variable which is being used in the Precedence Constraints joining the subsequent transformations. It contains the conditional expression which when evaluated, returns a Boolean value altering the package execution flow i.e. pointing it in either of the 2 directions – Execute SQL Task 1 or ForEach Loop Container 2. Below is the Precedence Constraint editor view for executing ForEach Loop Container 2 section.

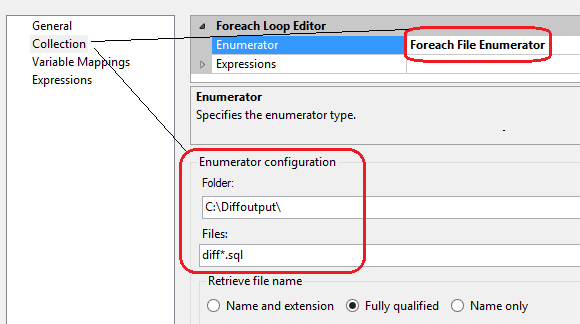

In the last section, ForEach Loop Container 2 iterates through SQL files created using the following enumerator configuration and variable mapping.

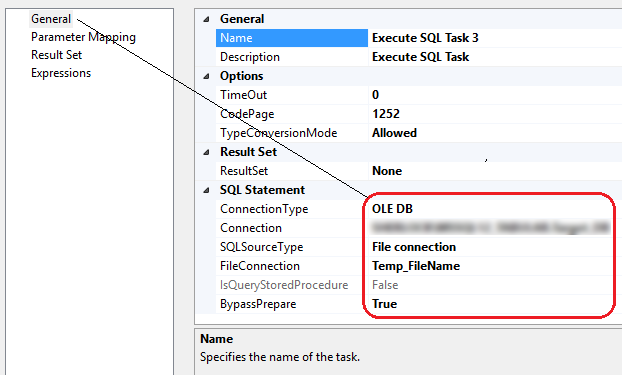

Inside the ForEach Loop Container 2, Execute SQL Task 3 transformation runs the SQL statements saved in the files, with SQL Source Type property configured as a File Connection and Temp_FileName connection selected as a value for File Connection property. File System Task simply archives the processed files into the archive sub-directory as the last step of this package’s execution.

The complete package can be downloaded from my SkyDrive folder HERE so you can use it as a template – just make sure you adjust all necessary parameters e.g. database connection details etc. to correspond to your environment. I have also attached a short video footage depicting the package execution and the outcome on the tables’ data synchronization and SQL files management.

So there you go, a fairly simple and easy to manage data synchronization solution using tablediff utility and Integration Services tool. If you take away the VB.NET code snippet, the whole solution can be build using the default tablediff functionality, pure SSIS and one very simple SELECT SQL statement so if your project can live with the tablediff functionality constraints and you don’t require a great deal of performance, this tool can become a simple and straightforward option for your data synchronization project.

http://scuttle.org/bookmarks.php/pass?action=addThis entry was posted on Wednesday, February 19th, 2014 at 9:56 am and is filed under SQL, SSIS. You can follow any responses to this entry through the RSS 2.0 feed. You can leave a response, or trackback from your own site.