Designing a historised, relational ‘data lake’ – how to speed up data warehouse development and not stress about upfront data modelling

Problem Statement

Data warehouse modelling techniques have largely been left unchanged for decades and most of common methodologies and patterns which were prevalent when I was starting my career in Business Intelligence are still applicable to most projects. In the early days, Inmon created the accepted definition of what a data warehouse is – a subject-oriented, non-volatile, integrated, time-variant collection of data in support of management’s decisions. Inmon approach to enterprise data warehousing assumes that tables are grouped together by subject areas that reflect general data categories (e.g. data on customers, products, finance, etc.). The normalized structure divides data into entities, which creates several tables in a relational database. When applied in large enterprises the result is dozens of tables that are linked together by a web of joins, with departmental data marts created for ease of use. His approach was often criticized for being top-heavy, complex to execute and generally requiring a lot more planning and up-front work. Ralph Kimbal, another prolific figure and the author of Kimbal approach to data warehouse architecture advocated for a simplified approach, where only denormalised data marts were created to satisfy business requirements which carried the benefit of being easier to understand, set-up and develop. However, just as Inmon methodology was often criticized, Kimbal’s approach was not without its faults e.g. data redundancy and maintaining integrity of facts and dimensions can be a challenge. Over the last decade, a third approach to data warehouse modelling started to make inroads. Data vault, a hybrid paradigm that combines the best of 3rd Normal Form (3NF) and dimension modeling, was conceived to address agility, flexibility, and scalability problems found in the other main stream data modelling approaches. It simplifies the data ingestion process, removes the cleansing requirement of a Star Schema, puts the focus on the real problem instead of programming around it and finally, it allows for the addition of new data sources integration without disruption to existing schema. However, as with other two modelling approaches, Data Vault has its fair share of disadvantages and it is not a panacea for all business data needs e.g. increase number of joins, not intended for ad-hoc end user access (including BI tools and OLAP), higher than average number of database and ETL objects etc.

The main issue with data being modelled in a very specific way is that organisations have to undergo a very rigorous and comprehensive scoping exercise to ensure that either of the three modelling methodologies disadvantages will not prohibit them from extracting intelligence out of the data they collect and allow for the most flexible, scalable and long-term support for their analytical platform they provisioning. Data warehouse projects, particularly the enterprise-wide ones, are an expensive and risky endeavour, often taking large amount of resources and years to complete. Even the most data-literate, mature and information management-engaged organizations embarking on the data warehouse built journey often fall prey to constraining themselves to structure their critical data assets in a very prescriptive and rigid way. As a result, once their enterprise data has been structured and formatted according to a specific methodology, it becomes very hard to go back to data’s ‘raw’ state. This often means a compromised and biased view of the business functions which does not fully reflect the answers the business is trying to find. This myopic portrayal of the data, in best case scenarios leads to creating other, disparate and siloed data sources (often by shadow BI/IT or untrusting end-users), whereas in extreme cases, the answers provided are unreliable and untrustworthy.

As such, over the last few years I have observed more and more business taking a completely different approach and abandon or at least minimise data warehouse-specific modelling techniques in favour of maintaining a historised data lake and creating a number of virtual data marts on top of it to satisfy even the most bespoke business requirements. With on-demand cloud computing becoming more prevalent and very powerful hardware able to crunch even copious amounts of raw data in seconds, the need for meticulously designed star or snowflake schema is slowly becoming secondary to how quickly organisations can get access to their data. With in-memory caching, solid state storage and distributed computing, for the first time ever we are witnessing old application design patterns and data modelling techniques becoming the bottleneck for the speed at which data can be processed. Also, with the ever-growing number of data sources to integrate with and data volumes, velocity and variety on the increase, data warehouse modelling paradigms are becoming more of a hindrance, constricting organisations to follow a standard or convention designed decades ago. As a result, what we’re witnessing is the following two scenarios for corporate data management playing out with more frequency:

- Enterprises looking for providing federation capabilities to support Logical Data Warehouse architecture across multiple platforms without having to move data and deal with complexities such as different technologies, formats, schemas, security protocols, locations etc.

- Enterprises looking for consolidating all their data sources in a single place with the speed and agility required to move at a quicker pace, without the constrain of modelling data in a prescriptive, narrow and methodical way as to not throw away the richness of the narrative the raw data may provide long-term

Implementation

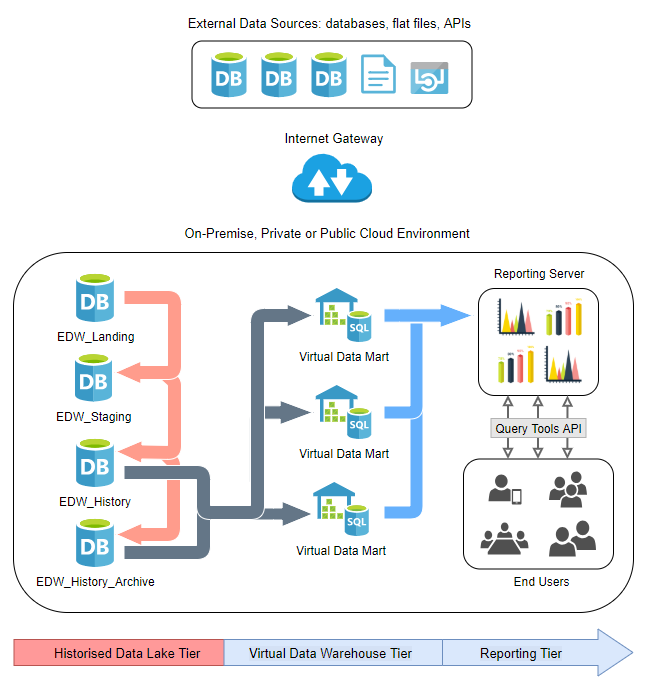

There are many patterns which can facilitate implementing the data lake but in this post we will look at creating four databases which together will handle transient application data (as acquired from the source system), staged data and finally data containing all transitional changes (inserts, deletes and updates) across all history. This design allows for a clever way of persisting all application data changes (one the core requirements of any data warehouse, irrespective of modelling approach taken) along with providing robust foundations for provisioning virtual data marts to satisfy reporting and analytical data needs. The virtual data marts can be easily implemented as views, with the required business rules embedded inside the SQL statements and, if required for performance reasons, materialised or physically persisted on disk or in memory.

The following diagram depicts the high-level architecture used in this scenario and the logical placement of EDW_Landing database used for initial data load, EDW_Staging database used for storing all changes, EDW_History database storing current view of the data and finally EDW_History_Archive storing all history.

To provide a tangible case scenario, let’s create a sample environment with the after mentioned database using Microsoft SQL Server platform. The following script can be used to create the required data stores along with all the objects and dummy data.

/*========================================================================================================

STEP 1

Create EDW_Landing, EDW_Staging, EDW_History, EDW_History_Archive databases on the local instance

========================================================================================================*/

SET NOCOUNT ON;

GO

USE master;

GO

IF EXISTS (SELECT name FROM sys.databases WHERE name = N'EDW_Landing')

BEGIN

-- Close connections to the EDW_Landing database

ALTER DATABASE EDW_Landing SET SINGLE_USER WITH ROLLBACK IMMEDIATE;

DROP DATABASE EDW_Landing;

END;

GO

-- Create SampleDB database and log files

CREATE DATABASE EDW_Landing

ON PRIMARY

(

NAME = N'EDW_Landing',

FILENAME = N'D:\Program Files\Microsoft SQL Server\MSSQL13.HNODWDEV\MSSQL\DATA\EDW_Landing.mdf',

SIZE = 10MB,

MAXSIZE = 1GB,

FILEGROWTH = 10MB

)

LOG ON

(

NAME = N'EDW_Landing_log',

FILENAME = N'D:\Program Files\Microsoft SQL Server\MSSQL13.HNODWDEV\MSSQL\DATA\EDW_Landing_log.ldf',

SIZE = 1MB,

MAXSIZE = 1GB,

FILEGROWTH = 10MB

);

GO

--Assign database ownership to login SA

EXEC EDW_Landing.dbo.sp_changedbowner @loginame = N'SA', @map = false;

GO

--Change the recovery model to BULK_LOGGED

ALTER DATABASE EDW_Landing SET RECOVERY SIMPLE;

GO

IF EXISTS (SELECT name FROM sys.databases WHERE name = N'EDW_Staging')

BEGIN

-- Close connections to the EDW_Staging database

ALTER DATABASE EDW_Staging SET SINGLE_USER WITH ROLLBACK IMMEDIATE;

DROP DATABASE EDW_Staging;

END;

GO

-- Create EDW_Staging database and log files

CREATE DATABASE EDW_Staging

ON PRIMARY

(

NAME = N'EDW_Staging',

FILENAME = N'D:\Program Files\Microsoft SQL Server\MSSQL13.HNODWDEV\MSSQL\DATA\EDW_Staging.mdf',

SIZE = 10MB,

MAXSIZE = 1GB,

FILEGROWTH = 10MB

)

LOG ON

(

NAME = N'EDW_Staging_log',

FILENAME = N'D:\Program Files\Microsoft SQL Server\MSSQL13.HNODWDEV\MSSQL\DATA\EDW_Staging_log.ldf',

SIZE = 1MB,

MAXSIZE = 1GB,

FILEGROWTH = 10MB

);

GO

--Assign database ownership to login SA

EXEC EDW_Staging.dbo.sp_changedbowner @loginame = N'SA', @map = false;

GO

--Change the recovery model to BULK_LOGGED

ALTER DATABASE EDW_Staging SET RECOVERY SIMPLE;

GO

IF EXISTS (SELECT name FROM sys.databases WHERE name = N'EDW_History')

BEGIN

-- Close connections to the EDW_History database

ALTER DATABASE EDW_History SET SINGLE_USER WITH ROLLBACK IMMEDIATE;

DROP DATABASE EDW_History;

END;

GO

-- Create EDW_History database and log files

CREATE DATABASE EDW_History

ON PRIMARY

(

NAME = N'EDW_History',

FILENAME = N'D:\Program Files\Microsoft SQL Server\MSSQL13.HNODWDEV\MSSQL\DATA\EDW_History.mdf',

SIZE = 10MB,

MAXSIZE = 1GB,

FILEGROWTH = 10MB

)

LOG ON

(

NAME = N'EDW_History_log',

FILENAME = N'D:\Program Files\Microsoft SQL Server\MSSQL13.HNODWDEV\MSSQL\DATA\EDW_History_log.ldf',

SIZE = 1MB,

MAXSIZE = 1GB,

FILEGROWTH = 10MB

);

GO

--Assign database ownership to login SA

EXEC EDW_History.dbo.sp_changedbowner @loginame = N'SA', @map = false;

GO

--Change the recovery model to BULK_LOGGED

ALTER DATABASE EDW_History SET RECOVERY SIMPLE;

GO

IF EXISTS

(

SELECT name

FROM sys.databases

WHERE name = N'EDW_History_Archive'

)

BEGIN

-- Close connections to the EDW_History_Archive database

ALTER DATABASE EDW_History_Archive

SET SINGLE_USER

WITH ROLLBACK IMMEDIATE;

DROP DATABASE EDW_History_Archive;

END;

GO

-- Create EDW_History database and log files

CREATE DATABASE EDW_History_Archive

ON PRIMARY

(

NAME = N'EDW_History_Archive',

FILENAME = N'D:\Program Files\Microsoft SQL Server\MSSQL13.HNODWDEV\MSSQL\DATA\EDW_History_Archive.mdf',

SIZE = 10MB,

MAXSIZE = 1GB,

FILEGROWTH = 10MB

)

LOG ON

(

NAME = N'EDW_History_Archive_log',

FILENAME = N'D:\Program Files\Microsoft SQL Server\MSSQL13.HNODWDEV\MSSQL\DATA\EDW_History_Archive_log.ldf',

SIZE = 1MB,

MAXSIZE = 1GB,

FILEGROWTH = 10MB

);

GO

--Assign database ownership to login SA

EXEC EDW_History_Archive.dbo.sp_changedbowner @loginame = N'SA',

@map = false;

GO

--Change the recovery model to BULK_LOGGED

ALTER DATABASE EDW_History_Archive SET RECOVERY SIMPLE;

GO

/*========================================================================================================

STEP 2

Create 'testapp' schema on all 4 databases created in Step 1

========================================================================================================*/

IF OBJECT_ID('tempdb..##dbs ') IS NOT NULL

BEGIN

DROP TABLE ##dbs;

END;

CREATE TABLE ##dbs

(

db VARCHAR(128),

dbid SMALLINT

);

INSERT INTO ##dbs

(

db,

dbid

)

SELECT name,

database_id

FROM sys.databases;

EXEC dbo.sp_MSforeachdb @command1 = 'IF DB_ID(''?'') in (SELECT dbid from ##dbs where db in(''EDW_Landing'', ''EDW_Staging'',''EDW_History'', ''EDW_History_Archive'')) BEGIN USE ? EXEC(''CREATE SCHEMA testapp'') END';

/*========================================================================================================

STEP 3

Create three tables - Table1, Table2 & Table3 across all 4 databases on the testapp schema.

Note: Depending on which database each table is created, its schema may vary slightly.

========================================================================================================*/

USE EDW_Landing;

GO

CREATE TABLE testapp.Table1

(

id INT NOT NULL,

transaction_key UNIQUEIDENTIFIER NOT NULL,

sale_price DECIMAL(10, 2) NOT NULL,

customer_id INT NOT NULL,

sale_datetime DATETIME NOT NULL,

etl_batch_datetime DATETIME2 NOT NULL,

etl_event_datetime DATETIME2 NOT NULL,

etl_cdc_operation VARCHAR(56) NOT NULL,

etl_row_id INT IDENTITY(1, 1) NOT NULL,

CONSTRAINT pk_testapp_table1_id

PRIMARY KEY CLUSTERED (id ASC)

WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON,

ALLOW_PAGE_LOCKS = ON

) ON [PRIMARY]

) ON [PRIMARY];

USE EDW_Landing;

GO

CREATE TABLE testapp.Table2

(

id INT NOT NULL,

transaction_key UNIQUEIDENTIFIER NOT NULL,

sale_price DECIMAL(10, 2) NOT NULL,

customer_id INT NOT NULL,

sale_datetime DATETIME NOT NULL,

etl_Batch_datetime DATETIME2 NOT NULL,

etl_Event_datetime DATETIME2 NOT NULL,

etl_cdc_operation VARCHAR(56) NOT NULL,

etl_row_id INT IDENTITY(1, 1) NOT NULL,

CONSTRAINT pk_testapp_table2_id

PRIMARY KEY CLUSTERED (id ASC)

WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON,

ALLOW_PAGE_LOCKS = ON

) ON [PRIMARY]

) ON [PRIMARY];

USE EDW_Landing;

GO

CREATE TABLE testapp.Table3

(

id INT NOT NULL,

transaction_key UNIQUEIDENTIFIER NOT NULL,

sale_price DECIMAL(10, 2) NOT NULL,

customer_id INT NOT NULL,

sale_datetime DATETIME NOT NULL,

etl_batch_datetime DATETIME2 NOT NULL,

etl_event_dateTime DATETIME2 NOT NULL,

etl_cdc_operation VARCHAR(56) NOT NULL,

etl_row_id INT IDENTITY(1, 1) NOT NULL,

CONSTRAINT pk_testapp_table3_id

PRIMARY KEY CLUSTERED (id ASC)

WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON,

ALLOW_PAGE_LOCKS = ON

) ON [PRIMARY]

) ON [PRIMARY];

USE EDW_Staging;

GO

CREATE TABLE testapp.Table1

(

id INT NULL,

transaction_key UNIQUEIDENTIFIER NULL,

sale_price DECIMAL(10, 2) NULL,

customer_id INT NULL,

sale_datetime DATETIME NULL,

etl_batch_datetime DATETIME2 NOT NULL,

etl_event_datetime DATETIME2 NOT NULL,

etl_cdc_operation VARCHAR(56) NOT NULL,

etl_row_id INT IDENTITY(1, 1) NOT NULL,

etl_hash_full_record VARCHAR(512)

) ON [PRIMARY];

USE EDW_Staging;

GO

CREATE TABLE testapp.Table2

(

id INT NULL,

transaction_key UNIQUEIDENTIFIER NULL,

sale_price DECIMAL(10, 2) NULL,

customer_id INT NULL,

sale_datetime DATETIME NULL,

etl_batch_datetime DATETIME2 NOT NULL,

etl_event_datetime DATETIME2 NOT NULL,

etl_cdc_operation VARCHAR(56) NOT NULL,

etl_row_id INT IDENTITY(1, 1) NOT NULL,

etl_hash_full_record VARCHAR(512)

) ON [PRIMARY];

USE EDW_Staging;

GO

CREATE TABLE testapp.Table3

(

id INT NULL,

transaction_key UNIQUEIDENTIFIER NULL,

sale_price DECIMAL(10, 2) NULL,

customer_id INT NULL,

sale_datetime DATETIME NULL,

etl_batch_datetime DATETIME2 NOT NULL,

etl_event_datetime DATETIME2 NOT NULL,

etl_cdc_operation VARCHAR(56) NOT NULL,

etl_row_id INT IDENTITY(1, 1) NOT NULL,

etl_hash_full_record VARCHAR(512)

) ON [PRIMARY];

USE EDW_History;

GO

CREATE TABLE testapp.Table1

(

hist_testapp_table1_sk CHAR(40) NOT NULL,

id INT NULL,

transaction_key UNIQUEIDENTIFIER NULL,

sale_price DECIMAL(10, 2) NULL,

customer_id INT NULL,

sale_datetime DATETIME NULL,

etl_batch_datetime DATETIME2 NOT NULL,

etl_event_datetime DATETIME2 NOT NULL,

etl_cdc_operation VARCHAR(56) NOT NULL,

etl_row_id INT NOT NULL,

etl_hash_full_record VARCHAR(512) NOT NULL,

CONSTRAINT pk_hist_testapp_table1

PRIMARY KEY CLUSTERED (

hist_testapp_table1_sk DESC,

etl_event_datetime ASC,

etl_row_id ASC

)

WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON,

ALLOW_PAGE_LOCKS = ON, FILLFACTOR = 90

) ON [PRIMARY]

) ON [PRIMARY];

GO

USE EDW_History;

GO

CREATE TABLE testapp.Table2

(

hist_testapp_table2_sk CHAR(40) NOT NULL,

id INT NULL,

transaction_key UNIQUEIDENTIFIER NULL,

sale_price DECIMAL(10, 2) NULL,

customer_id INT NULL,

sale_datetime DATETIME NULL,

etl_batch_datetime DATETIME2 NOT NULL,

etl_event_datetime DATETIME2 NOT NULL,

etl_cdc_operation VARCHAR(56) NOT NULL,

etl_row_id INT NOT NULL,

etl_hash_full_record VARCHAR(512) NOT NULL,

CONSTRAINT pk_hist_testapp_table2

PRIMARY KEY CLUSTERED (

hist_testapp_table2_sk DESC,

etl_event_datetime ASC,

etl_row_id ASC

)

WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON,

ALLOW_PAGE_LOCKS = ON, FILLFACTOR = 90

) ON [PRIMARY]

) ON [PRIMARY];

GO

USE EDW_History;

GO

CREATE TABLE testapp.Table3

(

hist_testapp_table3_sk CHAR(40) NOT NULL,

id INT NULL,

transaction_key UNIQUEIDENTIFIER NULL,

sale_price DECIMAL(10, 2) NULL,

customer_id INT NULL,

sale_datetime DATETIME NULL,

etl_batch_datetime DATETIME2 NOT NULL,

etl_event_datetime DATETIME2 NOT NULL,

etl_cdc_operation VARCHAR(56) NOT NULL,

etl_row_id INT NOT NULL,

etl_hash_full_record VARCHAR(512) NOT NULL,

CONSTRAINT pk_hist_testapp_table3

PRIMARY KEY CLUSTERED (

hist_testapp_table3_sk DESC,

etl_event_datetime ASC,

etl_row_id ASC

)

WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON,

ALLOW_PAGE_LOCKS = ON, FILLFACTOR = 90

) ON [PRIMARY]

) ON [PRIMARY];

GO

USE EDW_History_Archive;

GO

CREATE TABLE testapp.Table1

(

hist_testapp_table1_sk CHAR(40) NOT NULL,

id INT NULL,

transaction_key UNIQUEIDENTIFIER NULL,

sale_price DECIMAL(10, 2) NULL,

customer_id INT NULL,

sale_datetime DATETIME NULL,

etl_batch_datetime DATETIME2 NOT NULL,

etl_event_datetime DATETIME2 NOT NULL,

etl_cdc_operation VARCHAR(56) NOT NULL,

etl_row_id INT NOT NULL,

etl_hash_full_record VARCHAR(512) NOT NULL,

CONSTRAINT pk_hist_testapp_table1

PRIMARY KEY CLUSTERED (

hist_testapp_table1_sk DESC,

etl_event_datetime ASC,

etl_row_id ASC

)

WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON,

ALLOW_PAGE_LOCKS = ON, FILLFACTOR = 90

) ON [PRIMARY]

) ON [PRIMARY];

GO

USE EDW_History_Archive;

GO

CREATE TABLE testapp.Table2

(

hist_testapp_table2_sk CHAR(40) NOT NULL,

id INT NULL,

transaction_key UNIQUEIDENTIFIER NULL,

sale_price DECIMAL(10, 2) NULL,

customer_id INT NULL,

sale_datetime DATETIME NULL,

etl_batch_datetime DATETIME2 NOT NULL,

etl_event_datetime DATETIME2 NOT NULL,

etl_cdc_operation VARCHAR(56) NOT NULL,

etl_row_id INT NOT NULL,

etl_hash_full_record VARCHAR(512) NOT NULL,

CONSTRAINT pk_hist_testapp_table2

PRIMARY KEY CLUSTERED (

hist_testapp_table2_sk DESC,

etl_event_datetime ASC,

etl_row_id ASC

)

WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON,

ALLOW_PAGE_LOCKS = ON, FILLFACTOR = 90

) ON [PRIMARY]

) ON [PRIMARY];

GO

USE EDW_History_Archive;

GO

CREATE TABLE testapp.Table3

(

hist_testapp_table3_sk CHAR(40) NOT NULL,

id INT NULL,

transaction_key UNIQUEIDENTIFIER NULL,

sale_price DECIMAL(10, 2) NULL,

customer_id INT NULL,

sale_datetime DATETIME NULL,

etl_batch_datetime DATETIME2 NOT NULL,

etl_event_datetime DATETIME2 NOT NULL,

etl_cdc_operation VARCHAR(56) NOT NULL,

etl_row_id INT NOT NULL,

etl_hash_full_record VARCHAR(512) NOT NULL,

CONSTRAINT pk_hist_testapp_table3

PRIMARY KEY CLUSTERED (

hist_testapp_table3_sk DESC,

etl_event_datetime ASC,

etl_row_id ASC

)

WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON,

ALLOW_PAGE_LOCKS = ON, FILLFACTOR = 90

) ON [PRIMARY]

) ON [PRIMARY];

GO

/*========================================================================================================

STEP 4

Seed EDW_Landing database with dummy data

========================================================================================================*/

USE EDW_Landing;

GO

DECLARE @dt DATETIME = SYSDATETIME();

DECLARE @ct INT;

SET @ct = 0;

WHILE @ct < 1000

BEGIN

INSERT INTO testapp.Table1

(

id,

transaction_key,

sale_price,

customer_id,

sale_datetime,

etl_batch_datetime,

etl_event_datetime,

etl_cdc_operation

)

SELECT @ct + 1,

CONVERT(VARCHAR(255), NEWID()),

ROUND(RAND(CHECKSUM(NEWID())) * (100), 2),

CAST(RAND() * 1000000 AS INT),

DATEADD(DAY, (ABS(CHECKSUM(NEWID())) % 3650) * -1, GETDATE()),

@dt,

SYSDATETIME(),

'Insert';

INSERT INTO testapp.Table2

(

id,

transaction_key,

sale_price,

customer_id,

sale_datetime,

etl_batch_datetime,

etl_event_datetime,

etl_cdc_operation

)

SELECT @ct + 1,

CONVERT(VARCHAR(255), NEWID()),

ROUND(RAND(CHECKSUM(NEWID())) * (100), 2),

CAST(RAND() * 1000000 AS INT),

DATEADD(DAY, (ABS(CHECKSUM(NEWID())) % 3650) * -1, GETDATE()),

@dt,

SYSDATETIME(),

'Insert';

INSERT INTO testapp.Table3

(

id,

transaction_key,

sale_price,

customer_id,

sale_datetime,

etl_batch_datetime,

etl_event_datetime,

etl_cdc_operation

)

SELECT @ct + 1,

CONVERT(VARCHAR(255), NEWID()),

ROUND(RAND(CHECKSUM(NEWID())) * (100), 2),

CAST(RAND() * 1000000 AS INT),

DATEADD(DAY, (ABS(CHECKSUM(NEWID())) % 3650) * -1, GETDATE()),

@dt,

SYSDATETIME(),

'Insert';

SET @ct = @ct + 1;

END;

SELECT t.TABLE_CATALOG,

t.TABLE_SCHEMA,

t.TABLE_NAME,

c.COLUMN_NAME,

c.IS_NULLABLE,

c.DATA_TYPE,

c.ORDINAL_POSITION,

c.CHARACTER_MAXIMUM_LENGTH

FROM EDW_Landing.INFORMATION_SCHEMA.TABLES t

JOIN EDW_Landing.INFORMATION_SCHEMA.COLUMNS c

ON t.TABLE_SCHEMA = c.TABLE_SCHEMA

AND t.TABLE_CATALOG = c.TABLE_CATALOG

AND t.TABLE_NAME = c.TABLE_NAME

WHERE c.TABLE_NAME = 'Table1';

SELECT TOP 5

*

FROM EDW_Landing.testapp.Table1;

Once executed (allowing for any environment adjustments on your side), all four databases should be present on the instance and three tables containing dummy data created on each of those. I will not go over the source to target data acquisition part as I have already covered a few different methods and frameworks which may be used for this purpose, mainly HERE and HERE. Therefore, assuming our data has already been copied across in its raw form into EDW_Landing database, let’s focus on how to we can utilise this framework to reconcile row-level changes across all tables using this methodology.

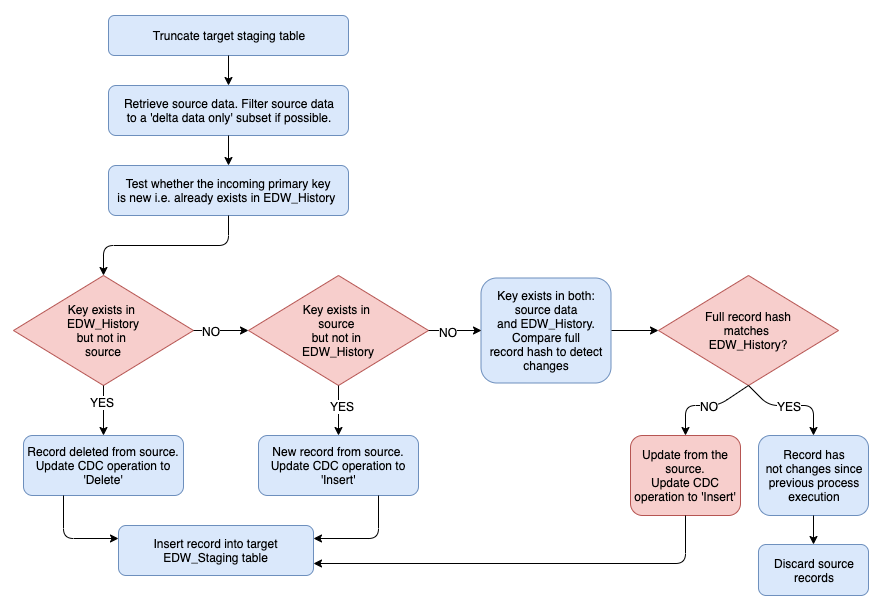

This architecture can be used across many different scenarios but it is most applicable in cases where the source table does not have a reliable method to determine which records have undergone changes since the previous execution of this process, therefore making it the Data Warehouse responsibility to derive the delta. By design, this architecture accomplishes this by comparing the current source data set with the previous snapshot as maintained in the Data Lake by executing full outer join between the source data set and the equivalent copy of the data in the Data Lake (EDW_History database). Any deleted records i.e. keys which exists in EDW_History tables but do not exists in the source will be merged into the EDW_Staging dataset with the CDC operation field value set to ‘D’ (delete). The following diagram depicts the pattern used to derive all changes across source and target data sets based on hashed keys comparison (click on image to enlarge).

What is important to notice is the way record-level comparison is accomplished. On larger datasets e.g. over 100 million rows, comparing each value in each column across all records would yield significant performance issues. To speed up values matching across source and target data sets, a hash key is computed, concatenating all values in the tuple and hashing those to a single block of bytes using HASHBYTES() SQL Server function. Comparing hashed values in a single column (especially when indexed) makes for an easy and flexible way of comparing large datasets quickly. The following code depicts the actual implementation of this architecture, comparing EDW_Landing data with the EDW_History data and staging changed data in EDW_Staging database based on the environment and data created by the previous script.

;WITH lnd

AS (SELECT id,

transaction_key,

sale_price,

customer_id,

sale_datetime,

etl_batch_datetime,

CAST(ROW_NUMBER() OVER (PARTITION BY id ORDER BY etl_event_datetime, etl_row_id) AS INT) AS source_row_number,

CONVERT(

VARCHAR(40),

HASHBYTES(

'SHA1',

UPPER(ISNULL(RTRIM(CONVERT(VARCHAR(200), transaction_key)), 'NA') + '|'

+ ISNULL(RTRIM(CONVERT(VARCHAR(20), sale_price)), 'NA') + '|'

+ ISNULL(RTRIM(CONVERT(NVARCHAR(100), customer_id)), 'NA') + '|'

+ ISNULL(RTRIM(CONVERT(VARCHAR(20), id)), 'NA')

+ ISNULL(RTRIM(CONVERT(VARCHAR(20), etl_cdc_operation)), 'NA') + '|' + '|'

)

),

2

) AS etl_hash_full_record

FROM EDW_Landing.testapp.Table1),

hist

AS (SELECT hist.id,

hist.transaction_key,

hist.sale_price,

hist.customer_id,

hist.sale_datetime,

hist.etl_hash_full_record,

CONVERT(

VARCHAR(40),

HASHBYTES(

'SHA1',

UPPER(ISNULL(RTRIM(CONVERT(VARCHAR(200), hist.transaction_key)), 'NA') + '|'

+ ISNULL(RTRIM(CONVERT(VARCHAR(20), hist.sale_price)), 'NA') + '|'

+ ISNULL(RTRIM(CONVERT(NVARCHAR(100), hist.customer_id)), 'NA')

+ ISNULL(RTRIM(CONVERT(VARCHAR(20), hist.id)), 'NA')

+ ISNULL(RTRIM(CONVERT(VARCHAR(20), 'Delete')), 'NA') + '|' + '|'

)

),

2

) AS etl_hash_full_record_delete

FROM EDW_History.testapp.Table1 hist

JOIN

(

SELECT id,

MAX(etl_event_datetime) AS etl_event_datetime

FROM EDW_History.testapp.Table1

GROUP BY id

) sub

ON hist.id = sub.id

AND hist.etl_event_datetime = sub.etl_event_datetime)

INSERT INTO EDW_Staging.testapp.Table1

(

id,

transaction_key,

sale_price,

customer_id,

sale_datetime,

etl_batch_datetime,

etl_event_datetime,

etl_cdc_operation,

etl_hash_full_record

)

SELECT COALESCE(lnd.id, hist.id),

COALESCE(lnd.transaction_key, hist.transaction_key),

COALESCE(lnd.sale_price, hist.sale_price),

COALESCE(lnd.customer_id, hist.customer_id),

COALESCE(lnd.sale_datetime, hist.sale_datetime),

COALESCE(lnd.etl_batch_datetime, GETDATE()),

GETDATE(),

CASE

WHEN lnd.id IS NOT NULL THEN

'Insert'

WHEN hist.id IS NOT NULL THEN

'Delete'

ELSE

NULL

END,

COALESCE(lnd.etl_hash_full_record, hist.etl_hash_full_record_delete)

FROM lnd

FULL OUTER JOIN hist

ON lnd.id = hist.id

WHERE hist.id IS NULL

OR lnd.id IS NULL

OR hist.etl_hash_full_record <> lnd.etl_hash_full_record;

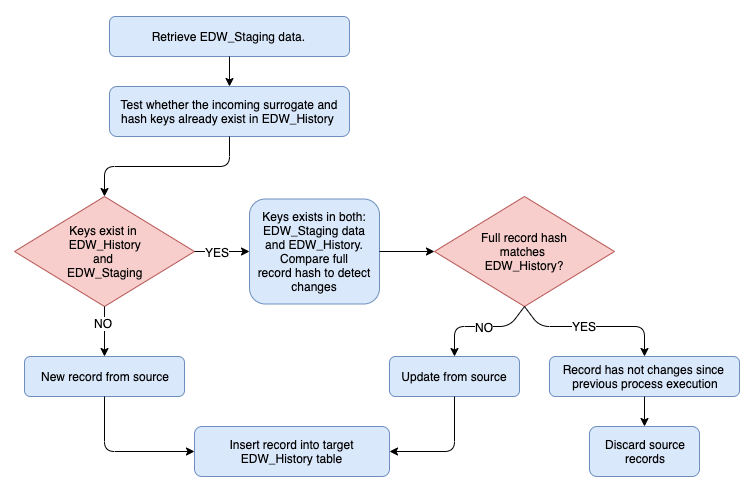

Once newly arrived or changed data has been staged in EDW_Staging database we can move it to EDW_History database. As EDW_Staging database is truncated every time a load is executed, EDW_History is responsible for storing the most current version of the data along with EDW_History_Archive which is used for persisting of all previous versions of the record. As with the previous pattern of loading EDW_Staging database, history is derived based on hash keys comparison for any changed records as well as hash and primary keys comparison for new data. The following diagram depicts the change detection pattern used in populating EDW_History database.

Looking at how this architecture can be achieved using SQL on the dummy data which was created previously, the following script implements historical data loading into EDW_History database.

;WITH stg

AS (SELECT CONVERT(VARCHAR(40), HASHBYTES('SHA1', ISNULL(RTRIM(CONVERT(VARCHAR(MAX), id)), 'NA')), 2) AS stg_testapp_table1_sk,

transaction_key,

sale_price,

customer_id,

sale_datetime,

etl_batch_datetime,

etl_hash_full_record,

etl_event_datetime,

etl_cdc_operation,

id,

CAST(ROW_NUMBER() OVER (PARTITION BY id ORDER BY etl_event_datetime, etl_row_id) AS INT) AS rn

FROM EDW_Staging.testapp.Table1),

hist

AS (SELECT hist.hist_testapp_table1_sk,

hist.etl_event_datetime,

hist.etl_hash_full_record,

hist.etl_row_id

FROM EDW_History.testapp.Table1 hist

INNER JOIN EDW_Staging.testapp.Table1 stg

ON hist.id = stg.id)

INSERT INTO EDW_History.testapp.Table1

(

hist_testapp_table1_sk,

id,

transaction_key,

sale_price,

customer_id,

sale_datetime,

etl_batch_datetime,

etl_event_datetime,

etl_cdc_operation,

etl_hash_full_record,

etl_row_id

)

SELECT stg.stg_testapp_table1_sk,

stg.id,

stg.transaction_key,

stg.sale_price,

stg.customer_id,

stg.sale_datetime,

stg.etl_batch_datetime,

stg.etl_event_datetime,

stg.etl_cdc_operation,

stg.etl_hash_full_record,

stg.rn

FROM stg

LEFT JOIN hist

ON stg.stg_testapp_table1_sk = hist.hist_testapp_table1_sk

AND stg.etl_event_datetime = hist.etl_event_datetime

WHERE hist.hist_testapp_table1_sk IS NULL

OR hist.etl_hash_full_record IS NULL

UNION ALL

SELECT stg.stg_testapp_table1_sk,

stg.id,

stg.transaction_key,

stg.sale_price,

stg.customer_id,

stg.sale_datetime,

stg.etl_batch_datetime,

stg.etl_event_datetime,

stg.etl_cdc_operation,

stg.etl_hash_full_record,

stg.rn

FROM stg

JOIN hist

ON stg.stg_testapp_table1_sk = hist.hist_testapp_table1_sk

AND stg.etl_event_datetime = hist.etl_event_datetime

WHERE stg.etl_hash_full_record <> hist.etl_hash_full_record;

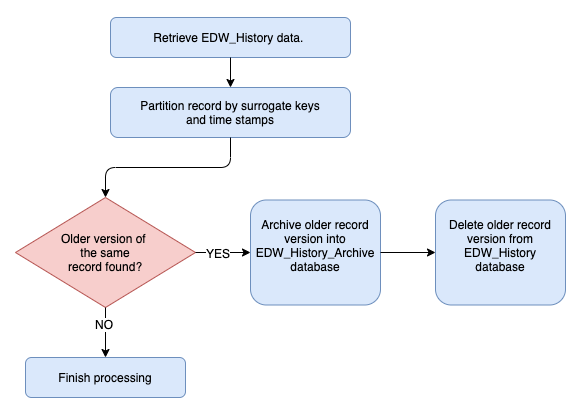

Finally, the last piece of the puzzle is to archive off all historised data into its own dedicated database – EDW_History_Archive – which stores all previous version of the record. Using a dedicated database for this ensures that current version of the data in EDW_History stays lean and is easy to query to get the most up-to-date information without the need to filter data based on dates or flags indicating record currency. EDW_History_Archive is designed to house all previous record versions based on changes introduced.

To achieve this, we will need to do it as a two-stage process i.e. insert data into EDW_History_Archive database and delete any non-current record from EDW_History database. The following diagram and SQL code depicts the logic used for this process.

INSERT INTO EDW_History_Archive.testapp.Table1

(

hist_testapp_table1_sk,

id,

transaction_key,

sale_price,

customer_id,

sale_datetime,

etl_batch_datetime,

etl_event_datetime,

etl_cdc_operation,

etl_hash_full_record,

etl_row_id

)

SELECT hist.hist_testapp_table1_sk,

hist.id,

hist.transaction_key,

hist.sale_price,

hist.customer_id,

hist.sale_datetime,

hist.etl_batch_datetime,

hist.etl_event_datetime,

hist.etl_cdc_operation,

hist.etl_hash_full_record,

hist.etl_row_id

FROM EDW_History.testapp.Table1 hist

INNER JOIN

(

SELECT B.hist_testapp_table1_sk,

B.etl_event_datetime AS max_etl_event_datetime,

B.etl_row_id AS max_etl_row_id,

ROW_NUMBER() OVER (PARTITION BY B.hist_testapp_table1_sk

ORDER BY B.etl_event_datetime DESC,

B.etl_row_id DESC

) AS row_no

FROM EDW_History.testapp.Table1 B

INNER JOIN EDW_Staging.testapp.Table1 STG

ON B.id = STG.id

AND B.etl_event_datetime = STG.etl_event_datetime

AND B.etl_row_id = STG.etl_row_id

) z

ON hist.hist_testapp_table1_sk = z.hist_testapp_table1_sk

AND CONCAT(hist.etl_event_datetime, hist.etl_row_id) <> CONCAT(z.max_etl_event_datetime, z.max_etl_row_id)

AND z.row_no = 1;

DELETE hist

FROM EDW_History.testapp.Table1 hist

INNER JOIN

(

SELECT B.hist_testapp_table1_sk,

B.etl_event_datetime AS max_etl_event_datetime,

B.etl_row_id AS max_etl_row_id,

ROW_NUMBER() OVER (PARTITION BY B.hist_testapp_table1_sk

ORDER BY B.etl_event_datetime DESC,

B.etl_row_id DESC

) AS row_no

FROM EDW_History.testapp.Table1 B

INNER JOIN EDW_Staging.testapp.Table1 stg

ON B.id = stg.id

AND B.etl_event_datetime = stg.etl_event_datetime

AND B.etl_row_id = stg.etl_row_id

) z

ON hist.hist_testapp_table1_sk = z.hist_testapp_table1_sk

AND CONCAT(hist.etl_event_datetime, hist.etl_row_id) <> CONCAT(z.max_etl_event_datetime, z.max_etl_row_id)

AND z.row_no = 1;

In order to merge historical data (EDW_History_Archive) as well as the most current record (EDW_History), we can UNION same objects based on a combination of keys and ids e.g. if we require to expose the full transactional history of a record which has undergone changes (updates, deletes) than we can combine its surrogate key with the business key (in case of Table1 dummy data these are hist_testapp_table1_sk and id) and optionally sort it by etl_batch_datetime to view its lifecycle across the history.

Now that we have the conceptual understanding of how this paradigm can be applied to ‘data lake’ creation, it is worth highlighting that all the scripts depicting the above architecture can be fully managed via the metadata-driven design. Rather than hard-coding all objects’ and attributes’ names, we can build those queries on the fly based on SQL Server metadata stored across its system catalog schema views and tables. As with any data warehouse design robust enough to respond to schema and data changes with little interference from developers, most simple logic following designated patterns should be derived from the information saved in the data store itself. With that approach, we can create a simple looping mechanism to run the metadata-based queries for each stage of the process, for each object being processed. Below is a sample code which builds the query used in populating EDW_Staging database (as per the SQL above) on the fly. We can then take this code and execute it inside a stored procedure or an ETL job without the need of hard-coding anything besides the names of the databases used (not bound to value changes).

IF OBJECT_ID('tempdb..##t_metadata') IS NOT NULL

BEGIN

DROP TABLE ##t_metadata;

END;

CREATE TABLE tempdb..##t_metadata

(

db_name VARCHAR(256),

table_name VARCHAR(256),

column_name VARCHAR(256),

schema_name VARCHAR(128),

ordinal_position INT,

is_nullable BIT,

data_type VARCHAR(256),

character_maximum_length BIGINT,

numeric_scale SMALLINT,

numeric_precision SMALLINT,

is_primary_key BIT,

cast_data_type VARCHAR(256)

);

DECLARE @command VARCHAR(MAX);

SELECT @command

= 'IF DB_ID(''?'') IN

(

SELECT dbid

FROM ##dbs

WHERE db IN ( ''EDW_Landing'',

''EDW_Staging'',

''EDW_History'',

''EDW_History_Archive'')

)

BEGIN

USE ?

EXEC(

''INSERT INTO ##t_metadata

(

db_name,

table_name,

column_name,

schema_name,

ordinal_position,

is_nullable,

data_type,

character_maximum_length,

numeric_scale,

numeric_precision,

is_primary_key,

cast_data_type

)

SELECT

DB_NAME() AS db_name,

t.name AS table_name ,

c.name AS column_name ,

ss.name as schema_name,

c.column_id AS ordinal_position ,

c.is_nullable ,

tp.name AS data_type ,

c.max_length AS character_maximum_length ,

c.scale AS numeric_scale ,

c.precision AS numeric_precision ,

ISNULL(idx.pk_flag,0) as ''''is_primary_key'''',

NULL AS cast_data_type

FROM sys.tables t

JOIN sys.columns c ON t.object_id = c.object_id

JOIN sys.types tp ON c.user_type_id = tp.user_type_id

JOIN sys.objects so ON so.object_id = t.object_id

JOIN sys.schemas ss ON so.schema_id = ss.schema_id

LEFT JOIN

(SELECT i.name as index_name,

i.is_primary_key as pk_flag,

OBJECT_NAME(ic.OBJECT_ID) AS table_name,

s.name AS schema_name,

COL_NAME(ic.OBJECT_ID,ic.column_id) AS column_name

FROM sys.indexes AS i

JOIN sys.index_columns AS ic

ON i.OBJECT_ID = ic.OBJECT_ID

AND i.index_id = ic.index_id

JOIN sys.objects o

ON o.object_id = i.object_id

JOIN sys.schemas s

ON o.schema_id = s.schema_id

WHERE i.is_primary_key = 1) idx

ON idx.table_name = t.name

AND idx.column_name = c.name

AND idx.schema_name = ss.name'')

END';

EXEC sp_MSforeachdb @command;

UPDATE ##t_metadata

SET cast_data_type = CASE

WHEN data_type IN ( 'char', 'varchar' ) THEN

CASE

WHEN character_maximum_length < 0 THEN

'VARCHAR (MAX)'

WHEN character_maximum_length <= 100 THEN

'VARCHAR (100)'

WHEN character_maximum_length > 100

AND character_maximum_length <= 1000 THEN

'VARCHAR (1000)'

WHEN character_maximum_length > 1000

AND character_maximum_length <= 4000 THEN

'VARCHAR (4000)'

WHEN character_maximum_length > 4000

AND character_maximum_length <= 8000 THEN

'VARCHAR (8000)'

ELSE

'VARCHAR (' + CAST(character_maximum_length AS VARCHAR(100)) + ')'

END

WHEN data_type IN ( 'nchar', 'nvarchar' ) THEN

CASE

WHEN character_maximum_length < 0 THEN

'NVARCHAR (MAX)'

WHEN character_maximum_length <= 100 THEN

'NVARCHAR (100)'

WHEN character_maximum_length > 100

AND character_maximum_length <= 1000 THEN

'NVARCHAR (1000)'

WHEN character_maximum_length > 1000

AND character_maximum_length <= 4000 THEN

'NVARCHAR (4000)'

WHEN character_maximum_length > 4000

AND character_maximum_length <= 8000 THEN

'NVARCHAR (8000)'

ELSE

'VARCHAR (' + CAST(character_maximum_length AS VARCHAR(100)) + ')'

END

WHEN data_type IN ( 'smalldatetime', 'datetime', 'datetime2', 'Timestamp' ) THEN

'VARCHAR(4000)'

WHEN data_type IN ( 'int', 'smallint', 'mediumint', 'tinyint', 'bit' ) THEN

'VARCHAR(4000)'

WHEN data_type IN ( 'bigint' ) THEN

'VARCHAR(4000)'

WHEN data_type IN ( 'decimal', 'number', 'float', 'real', 'numeric' ) THEN

'VARCHAR(4000)'

WHEN data_type IN ( 'uniqueidentifier' ) THEN

'VARCHAR(4000)'

WHEN data_type IN ( 'tinyblob' ) THEN

'VARCHAR(4000)'

WHEN data_type IN ( 'date' ) THEN

'VARCHAR(4000)'

WHEN data_type IN ( 'datetimeoffset' ) THEN

'VARCHAR(4000)'

WHEN data_type IN ( 'timestamp' ) THEN

'VARCHAR(4000)'

WHEN data_type IN ( 'long', 'long raw' ) THEN

'VARCHAR (MAX)'

WHEN data_type IN ( 'time' ) THEN

'VARCHAR(4000)'

ELSE

''

END;

IF OBJECT_ID('tempdb..##t_sql') IS NOT NULL

BEGIN

DROP TABLE ##t_sql;

END;

SELECT DISTINCT

t.table_name,

'WITH lnd AS ('

+ REVERSE(STUFF(

REVERSE('SELECT ' + STUFF(

(

SELECT ', ' + '[' + C.column_name + ']'

FROM ##t_metadata AS C

WHERE C.schema_name = t.schema_name

AND C.table_name = t.table_name

AND C.db_name = 'EDW_Landing'

ORDER BY C.ordinal_position

FOR XML PATH('')

),

1,

2,

''

) + ', CAST(ROW_NUMBER() OVER (PARTITION BY ' + a.column_name

+ ' ORDER BY

etl_event_datetime, etl_row_id) AS INT) AS source_row_number,

CONVERT(VARCHAR(40), HASHBYTES(''SHA1'', UPPER('

+ STUFF(

(

SELECT 'ISNULL(RTRIM(LTRIM(CONVERT(' + cast_data_type + ', ' + '[' + column_name

+ ']))), ''NA'') + ''|'' + '

FROM ##t_metadata z

WHERE z.table_name = t.table_name

AND z.db_name = 'EDW_Landing'

ORDER BY z.ordinal_position

FOR XML PATH('')

),

1,

0,

''

)

),

1,

8,

''

)

) + '+''|''+''|'')),2) AS etl_hash_full_record FROM EDW_Landing.' + t.schema_name + '.' + t.table_name

+ '),' AS lnd_query,

'hist AS ('

+ REVERSE(STUFF(

REVERSE('SELECT ' + STUFF(

(

SELECT ', ' + 'hist.[' + C.column_name + ']'

FROM ##t_metadata AS C

WHERE C.schema_name = t.schema_name

AND C.table_name = t.table_name

AND C.db_name = 'EDW_History'

ORDER BY C.ordinal_position

FOR XML PATH('')

),

1,

2,

''

) + ', CONVERT(VARCHAR(40),HASHBYTES(''SHA1'',UPPER('

+ STUFF(

(

SELECT 'ISNULL(RTRIM(LTRIM(CONVERT(' + cast_data_type + ', ' + 'hist.['

+ column_name + ']))), ''NA'') + ''|'' + '

FROM ##t_metadata z

WHERE z.table_name = t.table_name

AND z.db_name = 'EDW_History'

ORDER BY z.ordinal_position

FOR XML PATH('')

),

1,

0,

''

)

),

1,

8,

''

)

) + '+''|''+''|'')),2) AS etl_hash_full_record_delete

FROM EDW_History.' + t.schema_name + '.' + t.table_name

+ ' hist JOIN

(

SELECT id,

MAX(etl_event_datetime) AS etl_event_datetime

FROM EDW_History.' + t.schema_name + '.' + a.table_name

+ '

GROUP BY id

) sub

ON hist.' + a.column_name + ' = sub.' + a.column_name

+ '

AND hist.etl_event_datetime = sub.etl_event_datetime)' AS hist_query,

'INSERT INTO EDW_Staging.' + t.schema_name + '.' + t.table_name + ' ('

+ STUFF(

(

SELECT ', ' + '[' + C.column_name + ']'

FROM ##t_metadata AS C

WHERE C.schema_name = t.schema_name

AND C.table_name = t.table_name

AND C.column_name <> 'etl_row_id'

AND C.db_name = 'EDW_Staging'

ORDER BY C.ordinal_position

FOR XML PATH('')

),

1,

2,

''

) + ')' + ' SELECT '

+ STUFF(

(

SELECT CASE

WHEN C.column_name = 'etl_event_datetime' THEN

', GETDATE()'

WHEN C.column_name = 'etl_batch_datetime' THEN

', COALESCE(l.' + C.column_name + ', GETDATE())'

WHEN C.column_name = 'etl_cdc_operation' THEN

', CASE WHEN l.id IS NOT NULL THEN ''INSERT''

WHEN h.id IS NOT NULL THEN ''DELETE''

ELSE NULL

END'

ELSE

', ' + 'COALESCE(l.[' + C.column_name + '], h.[' + C.column_name + '])'

END

FROM ##t_metadata AS C

WHERE C.schema_name = t.schema_name

AND C.column_name <> 'etl_row_id'

AND C.table_name = t.table_name

AND C.db_name = 'EDW_Staging'

ORDER BY C.ordinal_position

FOR XML PATH('')

),

1,

2,

''

) + +' FROM lnd l

FULL OUTER JOIN hist h ON l.' + a.column_name + ' = h.' + a.column_name + '

WHERE h.' + a.column_name + ' IS NULL

OR l.' + a.column_name + ' IS NULL

OR h.etl_hash_full_record <> l.etl_hash_full_record;' AS insert_query

INTO ##t_sql

FROM ##t_metadata t

CROSS APPLY

(

SELECT DISTINCT

m.table_name,

m.column_name

FROM ##t_metadata m

WHERE m.table_name = t.table_name

AND m.column_name = t.column_name

AND m.is_primary_key = 1

AND m.db_name = 'EDW_Landing'

) a;

SELECT CONCAT(lnd_query, hist_query, insert_query) AS full_sql

FROM ##t_sql

ORDER BY table_name ASC;

Conclusion

So, there you go. A simple and efficient framework for designing and implementing a historised relational ‘data lake’ which can then be built upon to include virtual data marts with business-specific rules and definitions as well as a presentation layer for reporting needs.

In my personal experience as the information architect, the most mundane, exhaustive and repetitive activities I have seen most developers struggling with on any BI/analytics project were building data acquisitions pipelines and managing data’s history. Likewise, for most business trying to uplift their analytics capability through running data-related projects, those two tasks provide very little tangible value. By providing access to the integrated and historised data which any business can utilize to answer pressing questions and improve their competitive advantage in little time and with high accuracy, the need for meticulous, domain-specific modelling is diminished and users can start turning data into information with greater speed and efficiency.

It is also worth pointing out that I am not against data modelling in general as all the previously mentioned techniques and paradigms have greatly contributed to reducing risks, increasing performance and defining business processes through a set of concepts and rules tightly coupled to the models which reflect them. However, oftentimes, when organisations struggle with time-to-market and scope creep based on data complexities and the lack of structural independence and agility, it is worth exploring alternative approaches which may deliver 20% of functionality, yet providing 80% of value.

http://scuttle.org/bookmarks.php/pass?action=addThis entry was posted on Wednesday, February 6th, 2019 at 5:57 am and is filed under Data Modelling, SQL, SQL Server. You can follow any responses to this entry through the RSS 2.0 feed. You can leave a response, or trackback from your own site.

Ravi Agarwal December 2nd, 2019 at 8:07 am

Nice post.

I’m just getting into dimensional modelling ATM but with my department’s data being small, it probably does not make sense….you can just throw a materialized view over the relational schema. Being able to maintain history is a nice touch though and something that is a bit of a pain to develop using traditional dimensional modelling concepts. It’s the best of both worlds.

R