October 27th, 2017 / 2 Comments » / by admin

Tableau comes with a plethora of functionality out of the box but sometimes the following will inevitably be asked/proposed to meet business reporting requirements:

- End users will ask for features which do not exist (at least until version X is released or comes out of preview)

- In order to meet those bespoke demands, some level of custom development i.e. ‘hacking the status quo’ will be required to meet those requirements

In case of Tableau automated reports generation, where pagination and more comprehensive controls are required e.g. producing the same report as a series of PDF documents based on report filter value, the software falls short of providing this level of control. However, given the fact that I am not aware of any other application which provides such fine level of functionality (it’s always a fine balance between features selection and usability) and Tableau’s ability to allow users to dive ‘under the hood’ to breech such gaps, for technically savvy analysts it’s fairly easy to accommodate those demands with a little bit reverse-engineering.

Tableau server allows end users to query its internal workgroup database sitting atop of PostgreSQL DBMS. I have previously written about PostgreSQL to Microsoft SQL Server schema and data synchronisation HERE so I will not go into details of how Tableau stores its metadata but it’s fair to say that anyone who’s ever worked with a relational data stores should have no trouble cobbling together a simple query together to acquire the details on workbooks, views, jobs etc. that Tableau database stores and manages.

Tableau server also allows users to administer some tasks through its built-in tabcmd command-line utility e.g. creating and deleting users, exporting views or workbooks, publishing data sources etc. It’s a handy tool which can simplify administrative efforts and in this specific example we will be using it for creating report exports in an automated fashion.

In order to demo this solution on a typical business-like data, let’s create a sample data set and a very rudimentary Tableau report. The following SQL builds a single table database running on a local Microsoft SQL Server platform and populates it with dummy sales data.

/*==============================================================================

STEP 1

Create SampleDB databases on the local instance

==============================================================================*/

USE [master];

GO

IF EXISTS (SELECT name FROM sys.databases WHERE name = N'SampleDB')

BEGIN

-- Close connections to the StagingDB database

ALTER DATABASE SampleDB SET SINGLE_USER WITH ROLLBACK IMMEDIATE;

DROP DATABASE SampleDB;

END;

GO

-- Create SampleDB database and log files

CREATE DATABASE SampleDB

ON PRIMARY

(

NAME = N'SampleDB',

FILENAME = N'C:\Program Files\Microsoft SQL Server\MSSQL13.MSSQL2016DEV\MSSQL\DATA\SampleDB.mdf',

SIZE = 10MB,

MAXSIZE = 1GB,

FILEGROWTH = 10MB

)

LOG ON

(

NAME = N'SampleDB_log',

FILENAME = N'C:\Program Files\Microsoft SQL Server\MSSQL13.MSSQL2016DEV\MSSQL\DATA\SampleDB_log.LDF',

SIZE = 1MB,

MAXSIZE = 1GB,

FILEGROWTH = 10MB

);

GO

--Assign database ownership to login SA

EXEC SampleDB.dbo.sp_changedbowner @loginame = N'SA', @map = false;

GO

--Change the recovery model to BULK_LOGGED

ALTER DATABASE SampleDB SET RECOVERY BULK_LOGGED;

GO

/*======================================================================================

STEP 2

Create sample_data table on SampleDB database and insert 10000 dummy records

imitating mocked up sales transations across random dates, postcodes and SKU numbers

======================================================================================*/

USE SampleDB;

GO

SET NOCOUNT ON;

IF OBJECT_ID('sample_data') IS NOT NULL

BEGIN

DROP TABLE sample_data;

END;

-- Create target object

CREATE TABLE sample_data

(

id INT IDENTITY(1, 1) NOT NULL,

product_sku VARCHAR(512) NOT NULL,

quantity INT NOT NULL,

sales_amount MONEY NOT NULL,

postcode VARCHAR(4) NOT NULL,

state VARCHAR(128) NOT NULL,

order_date DATETIME NOT NULL

);

-- Populate target object with dummy data

DECLARE @postcodestate TABLE

(

id INT IDENTITY(1, 1),

postcode VARCHAR(4),

state VARCHAR(128)

);

INSERT INTO @postcodestate

(

postcode,

state

)

SELECT '2580', 'NSW' UNION ALL SELECT '2618', 'NSW' UNION ALL SELECT '2618', 'NSW' UNION ALL SELECT '2581', 'NSW' UNION ALL

SELECT '2582', 'NSW' UNION ALL SELECT '2580', 'NSW' UNION ALL SELECT '2550', 'NSW' UNION ALL SELECT '2550', 'NSW' UNION ALL

SELECT '2450', 'NSW' UNION ALL SELECT '3350', 'VIC' UNION ALL SELECT '3350', 'VIC' UNION ALL SELECT '3212', 'VIC' UNION ALL

SELECT '3215', 'VIC' UNION ALL SELECT '3880', 'VIC' UNION ALL SELECT '3759', 'VIC' UNION ALL SELECT '3037', 'VIC' UNION ALL

SELECT '3631', 'VIC' UNION ALL SELECT '4006', 'QLD' UNION ALL SELECT '4069', 'QLD' UNION ALL SELECT '4171', 'QLD' UNION ALL

SELECT '4852', 'QLD' UNION ALL SELECT '4852', 'QLD' UNION ALL SELECT '4352', 'QLD' UNION ALL SELECT '4701', 'QLD' UNION ALL

SELECT '4218', 'QLD' UNION ALL SELECT '5095', 'SA' UNION ALL SELECT '5097', 'SA' UNION ALL SELECT '5573', 'SA' UNION ALL

SELECT '5700', 'SA' UNION ALL SELECT '5214', 'SA' UNION ALL SELECT '6209', 'WA' UNION ALL SELECT '6054', 'WA' UNION ALL

SELECT '6068', 'WA' UNION ALL SELECT '6430', 'WA' UNION ALL SELECT '6770', 'WA' UNION ALL SELECT '7054', 'TAS' UNION ALL

SELECT '7253', 'TAS' UNION ALL SELECT '7140', 'TAS' UNION ALL SELECT '7179', 'TAS' UNION ALL SELECT '7109', 'TAS' UNION ALL

SELECT '0870', 'NT' UNION ALL SELECT '0872', 'NT' UNION ALL SELECT '0852', 'NT' UNION ALL SELECT '2617', 'ACT' UNION ALL

SELECT '2617', 'ACT' UNION ALL SELECT '2913', 'ACT' UNION ALL SELECT '2905', 'ACT' UNION ALL SELECT '2903', 'ACT';

DECLARE @allchars VARCHAR(100) = 'ABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789';

DECLARE @max_row_number INT;

SET @max_row_number = 10000;

DECLARE @count INT = 1;

WHILE @count <= @max_row_number

BEGIN

INSERT INTO SampleDB.dbo.sample_data

(

product_sku,

quantity,

sales_amount,

postcode,

state,

order_date

)

SELECT RIGHT(LEFT(@allchars, ABS(BINARY_CHECKSUM(NEWID()) % 35) + 1), 1)

+ RIGHT(LEFT(@allchars, ABS(BINARY_CHECKSUM(NEWID()) % 35) + 1), 1)

+ RIGHT(LEFT(@allchars, ABS(BINARY_CHECKSUM(NEWID()) % 35) + 1), 1)

+ RIGHT(LEFT(@allchars, ABS(BINARY_CHECKSUM(NEWID()) % 35) + 1), 1)

+ RIGHT(LEFT(@allchars, ABS(BINARY_CHECKSUM(NEWID()) % 35) + 1), 1),

CAST(RAND() * 5 + 3 AS INT),

CAST(RAND() * 50 AS INT) / 2.5,

(

SELECT TOP 1 postcode FROM @postcodestate ORDER BY NEWID()

),

(

SELECT TOP 1 state FROM @postcodestate ORDER BY NEWID()

),

GETDATE() - (365 * 3 * RAND() - 365);

SET @count = @count + 1;

END;

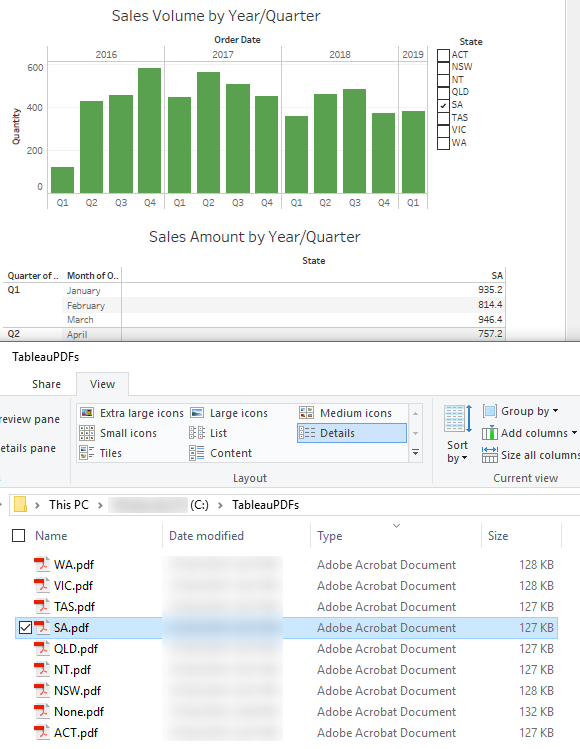

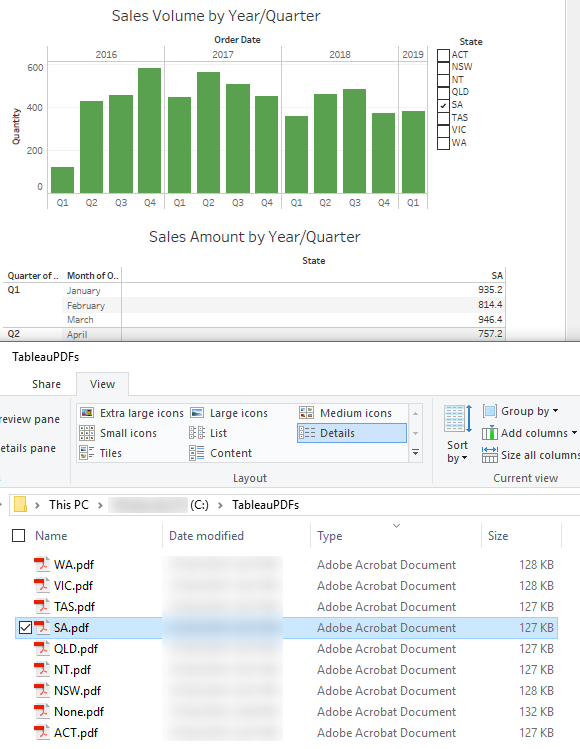

This table will become the basis for the following Tableau dashboard depicting random sales data aggregated by state, postcode, date and product SKU. The sample report looks as per the image below and is located on a Tableau server instance which we will be using to generate the extracts from. You can also notice that the dashboard contains a filter called ‘state’ (as highlighted) with a number of values assigned to it. This filter, along with the filter values representing individual states, will become the basis for producing separate workbook extracts for each of the filter value e.g. NT.pdf, VIC.pdf, NSW.pdf etc.

Next, let’s create a very simple database which will hold necessary metadata used for subsequent reports generation. SQLite is more than adequate for such low volumes of data. The database schema is an amalgamation of Tableau’s own workbook and view tables and other objects defined to store tabcmd options, attributes and filters we’d like to use in this example. The schema is generated and populated by the following Python script. The actual SQL used to define the DDL and DML statements can be found on my OneDrive folder HERE and is required as part of the overall solution.

import configparser

import sqlite3

import os

import sys

import argparse

import psycopg2

config = configparser.ConfigParser()

config.read('params.cfg')

#pg tables selection

pg_tblsNames = ['workbooks', 'views']

#sqlite args

dbsqlite_location = config.get('Sqlite',os.path.normpath('dbsqlite_location'))

dbsqlite_fileName = config.get('Sqlite','dbsqlite_fileName')

dbsqlite_sql = config.get('Sqlite','dbsqlite_sql')

parser = argparse.ArgumentParser(description='Tableau data extraction solution by bicortex.com')

#tableau server args

parser.add_argument('-n','--hostname', help='Tableau postgresql server name', required=True)

parser.add_argument('-d','--dbname', help='Tableau postgresql database name', required=True)

parser.add_argument('-u','--username', help='Tableau postgresql user name', required=True)

parser.add_argument('-p','--passwd', help='Tableau postgresql password', required=True)

args = parser.parse_args()

if not args.hostname or not args.dbname or not args.username or not args.passwd:

parser.print_help()

def run_DB_built(dbsqlite_sql, dbsqlite_location, dbsqlite_fileName, dbname, username, hostname, passwd, *args):

with sqlite3.connect(os.path.join(dbsqlite_location, dbsqlite_fileName)) as sqlLiteConn:

sqlLiteConn.text_factory = lambda x: x.unicode('utf-8', 'ignore')

operations=[]

commands=[]

sql={}

#get all SQL operation types as defined by the '----SQL' prefix in tableau_export.sql file

print('Reading tableau_export.sql file...')

with open(dbsqlite_sql, 'r') as f:

for i in f:

if i.startswith('----'):

i=i.replace('----','')

operations.append(i.rstrip('\n'))

f.close()

#get all SQL DML & DDL statements from tableau_export.sql file

tempCommands=[]

f = open(dbsqlite_sql, 'r').readlines()

for i in f:

tempCommands.append(i)

l = [i for i, s in enumerate(tempCommands) if '----' in s]

l.append((len(tempCommands)))

for first, second in zip(l, l[1:]):

commands.append(''.join(tempCommands[first:second]))

sql=dict(zip(operations, commands))

#run database CREATE SQL

print('Building TableauEX.db database schema... ')

sqlCommands = sql.get('SQL 1: Create DB').split(';')

for c in sqlCommands:

try:

sqlLiteConn.execute(c)

except sqlite3.OperationalError as e:

print (e)

sqlLiteConn.rollback()

sys.exit(1)

else:

sqlLiteConn.commit()

#acquire PostgreSQL workgroup database data and populate SQLite database schema with that data

print('Acquiring Tableau PostgreSQL data and populating SQLite database for the following tables: {0}...'.format(', '.join(map(str, pg_tblsNames))))

pgConn = "dbname={0} user={1} host={2} password={3} port=8060".format(dbname, username, hostname, passwd)

pgConn = psycopg2.connect(pgConn)

pgCursor = pgConn.cursor()

for tbl in pg_tblsNames:

try:

tbl_cols={}

pgCursor.execute("""SELECT ordinal_position, column_name

FROM information_schema.columns

WHERE table_name = '{}'

AND table_schema = 'public'

AND column_name != 'index'

ORDER BY 1 ASC""".format(tbl))

rows = pgCursor.fetchall()

for row in rows:

tbl_cols.update({row[0]:row[1]})

sortd = [tbl_cols[key] for key in sorted(tbl_cols.keys())]

cols = ",".join(sortd)

pgCursor.execute("SELECT {} FROM {}".format(cols,tbl))

rows = pgCursor.fetchall()

num_columns = max(len(rows[0]) for t in rows)

pgsql="INSERT INTO {} ({}) VALUES({})".format(tbl[:-1],cols,",".join('?' * num_columns))

sqlLiteConn.executemany(pgsql,rows)

except psycopg2.Error as e:

print(e)

sys.exit(1)

else:

sqlLiteConn.commit()

#update SQLite bridging tables based on DML statements under 'SQL 2: Update DB' opertation type header

print('Updating SQLite database bridging tables...')

sqlCommands = sql.get('SQL 2: Update DB').split(';')

for c in sqlCommands:

try:

sqlLiteConn.execute(c)

except sqlite3.OperationalError as e:

print (e)

sqlLiteConn.rollback()

sys.exit(1)

else:

sqlLiteConn.commit()

sqlLiteConn.close()

pgConn.close()

if __name__ == "__main__":

run_DB_built(dbsqlite_sql, dbsqlite_location, dbsqlite_fileName, args.dbname, args.username, args.hostname, args.passwd, pg_tblsNames)

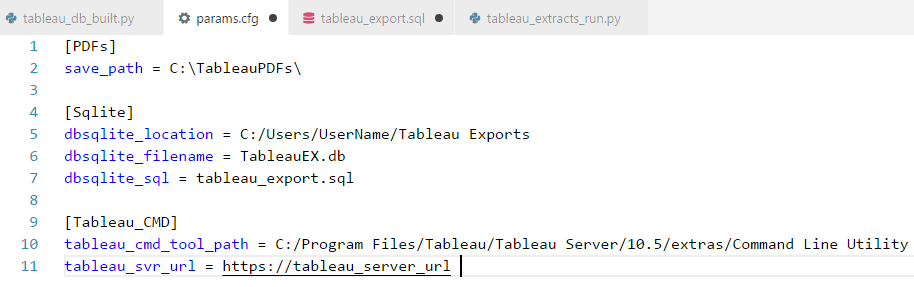

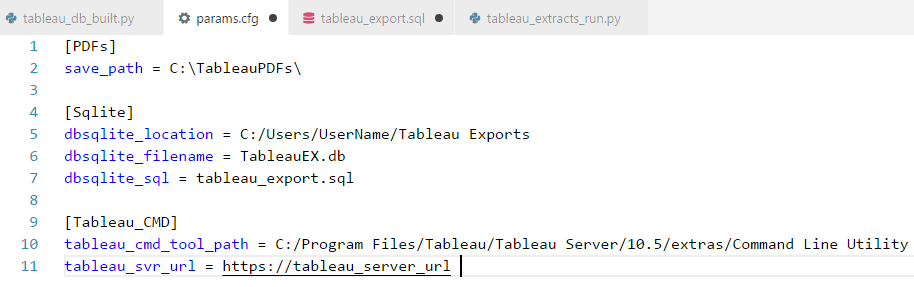

I have run this code against Tableau version 10.5 so there is a slight chance that some SQL statements will require adjustments depending on Tableau’s PostgreSQL database changes in subsequent releases. The script uses the aforementioned SQL file as well as one configuration file where some of the required parameters are stored as per the image below. Please also note that this SQL file contains data for the filter table comprised of Australian states (corresponding to Tableau workbook filter and its values as indicated above) so your actual data may contain other filter names and values or may even not use them at all. Also, Tableau workgroup database schema does not store filter names and/or values so in order to have them referenced in the script they need to be added manually.

Once executed, the following schema should be built and populated.

Now that we have laid down the foundations for this solution and have the SQLite database created and populated as well as a sample Tableau dashboard deployed on Tableau server pointing to our dummy data stored in SQL Server we can run Tableau reports generation script. For this example, let’s assume that we need to generate a separate PDF copy of the dashboard for each of the states defined in the filter table. The query will look as per the following.

SELECT

w.name AS workbook_name,

v.name AS view_name,

f.name AS filter_name,

f.value AS filter_value

FROM workbook w

JOIN view v ON w.id = v.fk_workbook_id

JOIN view_filter_bridge vfb ON v.id = vfb.fk_view_id

JOIN filter f ON vfb.fk_filter_id = f.id

WHERE w.name = 'Sample_Tableau_Report'

AND v.sheettype = 'dashboard'

AND v.state = 'active';

Entering the same query into a Python script, which than further breaks down the returned attributes and their values into tabcmd command arguments in a looped fashion, produces a series of statements which in turn produce PDF version of the nominated dashboard. Below is the Python script which saves a copy of Sales_Overview_Dashboard report in a PDF format for each state to the specified location.

import configparser

import sqlite3

import os

import sys

import argparse

import subprocess

import pandas as pd

config = configparser.ConfigParser()

config.read('params.cfg')

#sqlite & tabcmd args

dbsqlite_location = config.get('Sqlite',os.path.normpath('dbsqlite_location'))

dbsqlite_fileName = config.get('Sqlite','dbsqlite_fileName')

tabcmd_location = config.get('Tableau_CMD','tableau_cmd_tool_path')

tabcmd_url = config.get('Tableau_CMD','tableau_svr_url')

pdfs_location = config.get('PDFs', 'save_path')

parser = argparse.ArgumentParser(description='Tableau report(s) generation script by bicortex.com')

#tableau server args

parser.add_argument('-u', '--username', help='Tableau server user name', required=True)

parser.add_argument('-p', '--passwd', help='Tableau server password', required=True)

parser.add_argument('-o', '--option', help='Other options and arguments provided by tabcmd', required=False)

args = parser.parse_args()

if not args.username or not args.passwd:

parser.print_help()

#tableau login function

def tab_login(tabcmd_location, tabcmd_url, username, passwd):

try:

p=subprocess.run('{0} login -s {1} -u {2} -p {3} -no-certcheck'\

.format(os.path.join(os.path.normpath(tabcmd_location),'tabcmd'),\

tabcmd_url, args.username, args.passwd ),shell=True)

r=p.returncode

return r

except subprocess.SubprocessError as e:

print(e)

sys.exit(1)

#tableau logout function

def tab_logout(tabcmd_location):

try:

p=subprocess.run('{0} logout'.format(os.path.join(os.path.normpath(tabcmd_location),'tabcmd')),shell=True)

except subprocess.SubprocessError as e:

print(e)

sys.exit(1)

#tabcmd report export function

def run_extracts(pdfs_location, tabcmd_location, username=args.username, passwd=args.passwd, option=args.option):

standard_export_options = '--pdf --pagelayout landscape --no-certcheck --timeout 500'

login_ok = tab_login(tabcmd_location, tabcmd_url, username, passwd)

if login_ok==0:

with sqlite3.connect(os.path.join(dbsqlite_location, dbsqlite_fileName)) as sqlLiteConn:

sqliteCursor = sqlLiteConn.cursor()

sqliteCursor.execute( """

SELECT

w.name AS workbook_name,

v.name AS view_name,

f.name AS filter_name,

f.value AS filter_value

FROM workbook w

JOIN view v ON w.id = v.fk_workbook_id

JOIN view_filter_bridge vfb ON v.id = vfb.fk_view_id

JOIN filter f ON vfb.fk_filter_id = f.id

WHERE w.name = 'Sample_Tableau_Report'

AND v.sheettype = 'dashboard'

AND v.state = 'active';

""")

result_set = sqliteCursor.fetchall()

if result_set:

df = pd.DataFrame(result_set)

col_name_list = [tuple[0] for tuple in sqliteCursor.description]

df.columns = col_name_list

print('\nThe following attributes and values were returned from the SQL query:')

print(df)

for row in result_set:

workbook_name = row[0]

view_name = row[1]

filter_name = row[2]

filter_value = row[3]

if filter_name:

if ' ' in row[2]==True:

filter_name = row[2].replace(' ', '%20')

if ' ' in row[2]==True:

filter_value = row[2].replace(' ', '%20')

if not option:

option_value = standard_export_options

command = '{0} export "{1}?{2}={3}" -f "{4}{5}.pdf" {6} '\

.format(os.path.join(os.path.normpath(tabcmd_location),'tabcmd'),\

workbook_name + '/' + view_name, filter_name, filter_value, pdfs_location, filter_value, option_value)

try:

p=subprocess.run(command, shell=True)

except subprocess.SubprocessError as e:

print(e)

sys.exit(1)

else:

if not option:

option_value = standard_export_options

command = '{0} export "{1}" -f "{2}{3}.pdf" {4} '\

.format(os.path.join(os.path.normpath(tabcmd_location),'tabcmd'),\

workbook_name + '/' + view_name, pdfs_location, view_name, option_value)

try:

p=subprocess.run(command, shell=True)

except subprocess.SubprocessError as e:

print(e)

sys.exit(1)

tab_logout(tabcmd_location)

if __name__ == "__main__":

if ' ' in tabcmd_location:

tabcmd_location = '"'+ os.path.normpath(tabcmd_location) + '"'

else:

tabcmd_location = os.path.normpath(tabcmd_location)

run_extracts(pdfs_location, tabcmd_location, args.username, args.passwd)

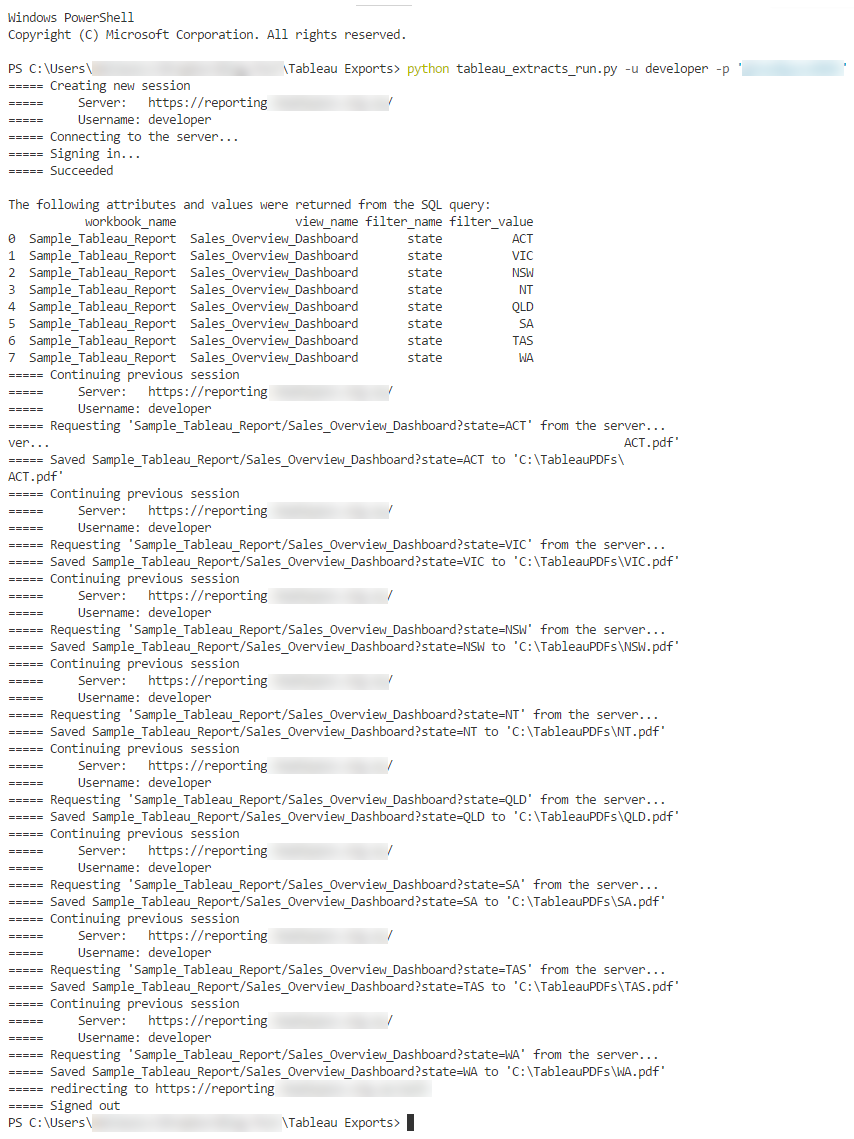

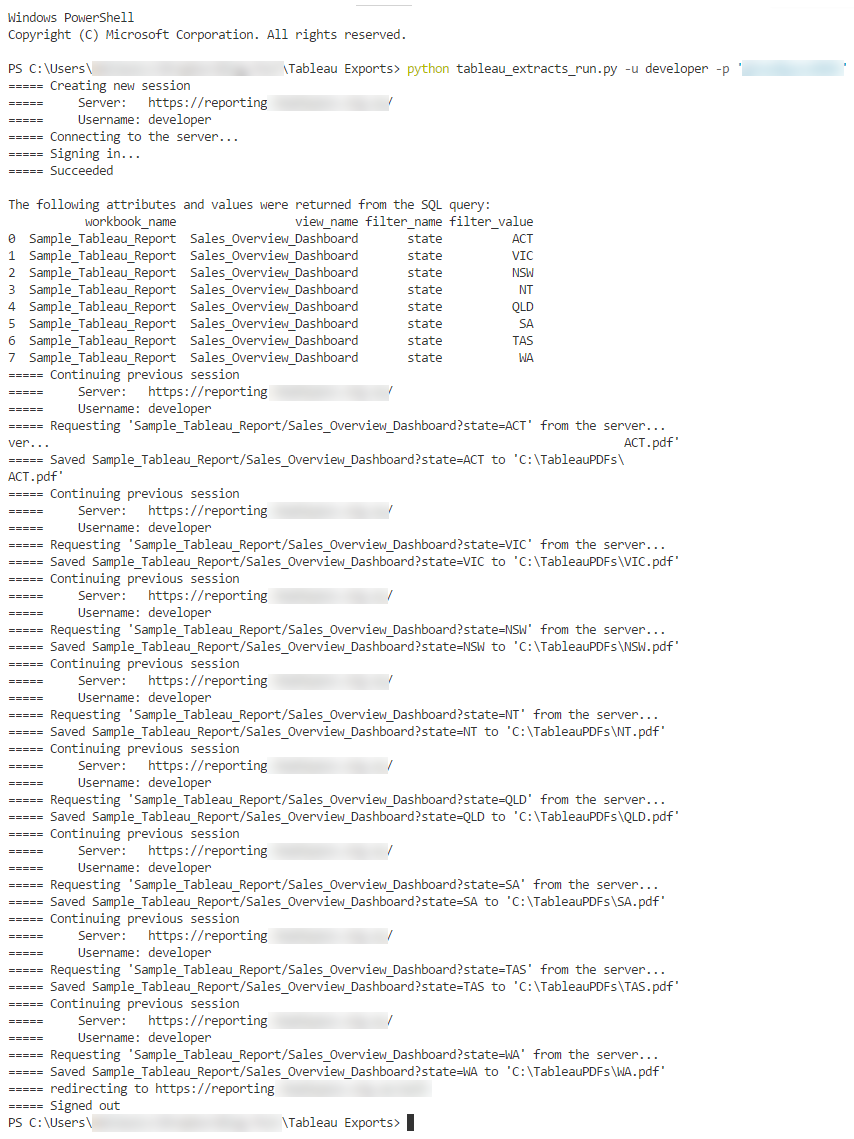

This script assumes that tabcmd utility is installed on the local machine if not run from the Tableau server environment (which has it installed by default) and produces the following output when run from the command prompt (click on image to expand).

The following is the folder view of where the individual reports have been saved as well as a partial view of South Australia state report (SA.pdf) opened depicting SA data only, with remaining states filtered out of the context.

Similar scripts can be run for all states i.e. no filter assign. The difference being the SQL statement we pass in the Python script. For example, if we would like to run Sales Overview Dashboard report across all states on a single PDF sheet we could run the following SQL and embed it into the script.

SELECT

w.name AS workbook_name,

v.name AS view_name,

NULL AS filter_name,

NULL AS filter_value

FROM workbook w

JOIN view v ON w.id = v.fk_workbook_id

WHERE w.name = 'Sample_Tableau_Report'

AND v.sheettype = 'dashboard'

AND v.state = 'active';

This technique can be used across a variety of different combinations and permutations of workbooks, sheets, filters, option etc. and is just a small example of how one can generate a simple solution for an automated workbook extracts. For the full scope of tabcmd commands and associated functionality please refer to Tableau online documentation.

Posted in: Programming, Tableau, Visualisation

Tags: Python, SQL, Tableau

August 23rd, 2017 / 1 Comment » / by admin

Note: Part one to this series can be found HERE

In my last blog post I outlined the concept of creating a simple Python GUI application which utilised Amazon Polly Text-To-Speech cloud API. The premise was quite simple – retrieve chat data stored in SQL Server database and pass it to Polly API to convert it into audible stream using a choice of different male and female voices.

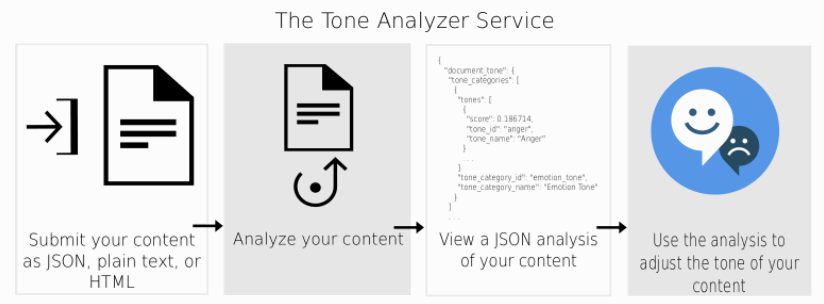

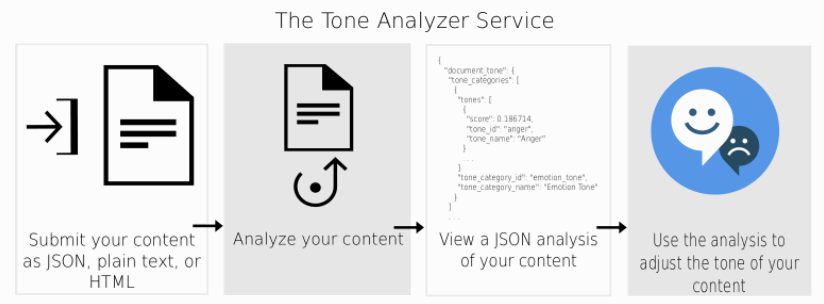

Whilst this functionality provided a good ‘playground’ to showcase one of the multitude of cloud-enabled machine learning applications, I felt that augmenting text-to-voice feature with some visual clues as a representation of the chat content would provide additional value. This is where I thought pairing text-to-speech with linguistic analysis can make this app even more useful and complete. As of today, all major cloud juggernauts offer a plethora general-purpose ML services but when it comes to linguistic analysis which goes beyond sentiment tagging, IBM has risen to become a major player in this arena. IBM Watson Tone Analyser specifically targets understanding emotions and communication style using linguistic analysis to detect emotional, social and language tones in written text. Tones detected within the ‘General Purpose Endpoint’ include joy, fear, sadness, anger, disgust, analytical, confident, tentative, openness, conscientiousness, extraversion, agreeableness, and emotional range. Typical use cases for this service include analysing emotions and tones in what people write online, like tweets or reviews, predicting whether they are happy, sad, confident as well as monitoring customer service and support conversations, personalised marketing and of course chat bots. The following diagram shows the basic flow of calls to the service.

You authenticate to the Tone Analyzer API by providing the username and password that are provided in the service credentials for the service instance that you want to use. The API uses HTTP basic authentication. The request includes several parameters and their respective value options and the simplest way to kick some tires (after completing the sigh-up process) is to use curl tool command and some sample text for analysis e.g.

curl -v -u "username":"password" -H "Content-Type: text/plain" -d "I feel very happy today!"

"https://gateway.watsonplatform.net/tone-analyzer/api/v3/tone?version=2016-05-19"

{

"document_tone": {

"tone_categories": [

{

"tones": [

{

"score": 0.013453,

"tone_id": "anger",

"tone_name": "Anger"

},

{

"score": 0.017433,

"tone_id": "disgust",

"tone_name": "Disgust"

},

{

"score": 0.039234,

"tone_id": "fear",

"tone_name": "Fear"

},

{

"score": 0.857981,

"tone_id": "joy",

"tone_name": "Joy"

},

{

"score": 0.062022,

"tone_id": "sadness",

"tone_name": "Sadness"

}

],

"category_id": "emotion_tone",

"category_name": "Emotion Tone"

},

{

"tones": [

{

"score": 0,

"tone_id": "analytical",

"tone_name": "Analytical"

},

{

"score": "0.849827",

"tone_id": "confident",

"tone_name": "Confident"

},

{

"score": 0,

"tone_id": "tentative",

"tone_name": "Tentative"

}

],

"category_id": "language_tone",

"category_name": "Language Tone"

},

{

"tones": [

{

"score": 0.016275,

"tone_id": "openness_big5",

"tone_name": "Openness"

},

{

"score": 0.262399,

"tone_id": "conscientiousness_big5",

"tone_name": "Conscientiousness"

},

{

"score": 0.435574,

"tone_id": "extraversion_big5",

"tone_name": "Extraversion"

},

{

"score": 0.679046,

"tone_id": "agreeableness_big5",

"tone_name": "Agreeableness"

},

{

"score": 0.092516,

"tone_id": "emotional_range_big5",

"tone_name": "Emotional Range"

}

],

"category_id": "social_tone",

"category_name": "Social Tone"

}

]

}

}

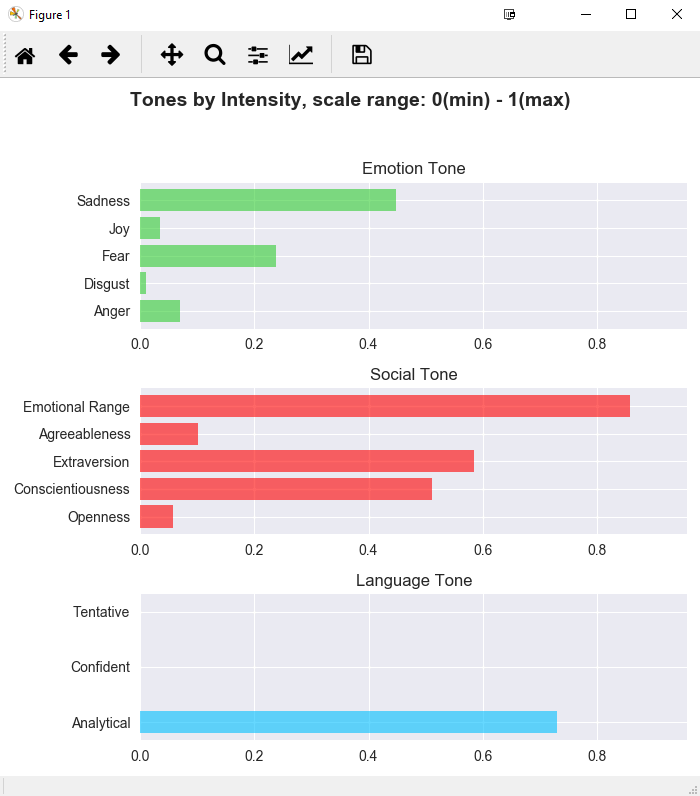

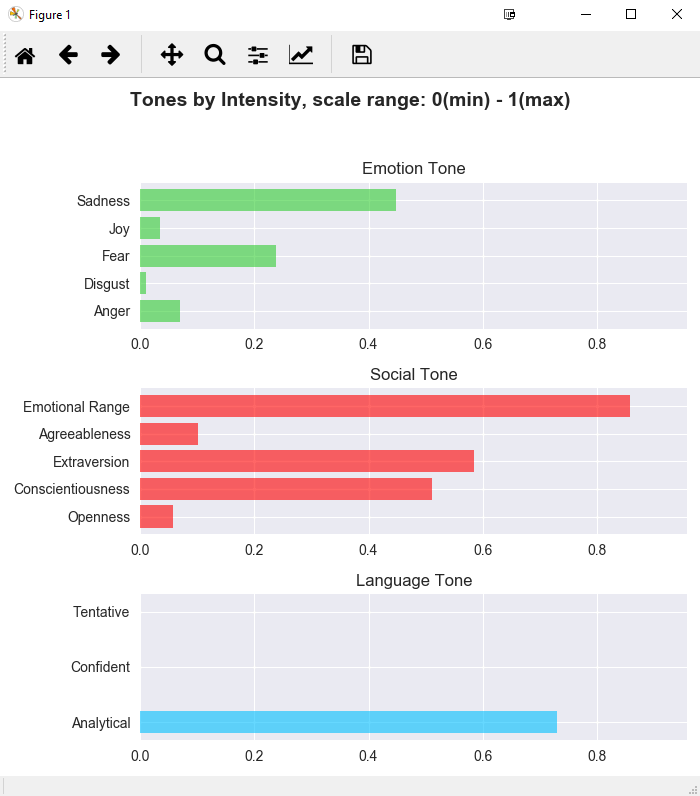

The service returns JSON structure which can be further unpacked and analysed/visualised. Using their SDK and a little bit of Python we can create a little script that will pass the desired text to the Tone Analyser API and return a matplotlib graph chart visualising each tone value within its respective category. Below is a simple visualisation of a paragraph containing text with linguistically-negative sentiment and the Python code generating it.

import matplotlib.pyplot as plt

import numpy as np

import matplotlib as mpl

import watson_developer_cloud as wdc

tone_analyzer = wdc.ToneAnalyzerV3(

version='2016-05-19',

username='username',

password='password',

x_watson_learning_opt_out=True

)

message = 'Hi Team, I know the times are difficult! \

Our sales have been disappointing for the \

past three quarters for our data analytics \

product suite. We have a competitive data \

analytics product suite in the industry. \

But we need to do our job selling it!'

tone=tone_analyzer.tone(message, sentences=False, content_type='text/plain')

#assign each tone name and value to its respective category

emotion_tone={}

language_tone={}

social_tone={}

for cat in tone['document_tone']['tone_categories']:

print('Category:', cat['category_name'])

if cat['category_name'] == 'Emotion Tone':

for tone in cat['tones']:

print('-', tone['tone_name'], tone['score'])

emotion_tone.update({tone['tone_name']:tone['score']})

if cat['category_name'] == 'Social Tone':

for tone in cat['tones']:

print('-', tone['tone_name'], tone['score'])

social_tone.update({tone['tone_name']:tone['score']})

if cat['category_name'] == 'Language Tone':

for tone in cat['tones']:

print('-', tone['tone_name'], tone['score'])

language_tone.update({tone['tone_name']:tone['score']})

#find largest value in all tones to adjust the x scale accordingly

max_tone_value = {**emotion_tone, **language_tone, **social_tone}

if max(max_tone_value.values()) > 0.9:

max_tone_value = 1

else:

max_tone_value = max(max_tone_value.values())+0.1

#plot all tones by category

fig = plt.figure(figsize=(7,7))

mpl.style.use('seaborn')

fig.suptitle('Tones by Intensity, scale range: 0(min) - 1(max)', fontsize=14, fontweight='bold')

x1=fig.add_subplot(311)

y_pos = np.arange(len(emotion_tone.keys()))

plt.barh(y_pos, emotion_tone.values(), align='center', alpha=0.6, color='limegreen')

plt.yticks(y_pos, emotion_tone.keys())

plt.title('Emotion Tone', fontsize=12)

x1.set_xlim([0, max_tone_value])

x2=fig.add_subplot(312)

y_pos = np.arange(len(social_tone.keys()))

plt.barh(y_pos, social_tone.values(), align='center', alpha=0.6,color='red')

plt.yticks(y_pos, social_tone.keys())

plt.title('Social Tone',fontsize=12)

x2.set_xlim([0, max_tone_value])

x3=fig.add_subplot(313)

y_pos = np.arange(len(language_tone.keys()))

plt.barh(y_pos, language_tone.values(), height = 0.4, align='center', alpha=0.6, color='deepskyblue')

plt.yticks(y_pos, language_tone.keys())

plt.title('Language Tone',fontsize=12)

x3.set_xlim([0, max_tone_value])

plt.tight_layout(pad=0.9, w_pad=0.5, h_pad=1.7)

fig.subplots_adjust(top=0.85, left=0.20)

plt.show()

And finally, the amended Python code for the complete application (including AWS Polly integration from Part 1) is as follows:

import sys

import time

import io

from contextlib import closing

import multiprocessing

import pygame

import numpy as np

import pyodbc

import boto3

import watson_developer_cloud as wdc

import tkinter as tk

from tkinter import scrolledtext, ttk, messagebox

import matplotlib as mpl

import matplotlib.pyplot as plt

from matplotlib.backends.backend_tkagg import FigureCanvasTkAgg

class ConnectionInfo:

def __init__(self):

self.use_win_auth = tk.IntVar()

self.inst_srv = tk.StringVar()

self.inst_db = tk.StringVar()

self.inst_login = tk.StringVar()

self.inst_passwd = tk.StringVar()

self.session_id = tk.IntVar()

self.use_aws_api = tk.IntVar(value=1)

self.aws_access_key_id = tk.StringVar()

self.aws_secret_access_key = tk.StringVar()

self.use_ibm_api = tk.IntVar(value=1)

self.ibm_username = tk.StringVar()

self.ibm_passwd = tk.StringVar()

self.clinician_voice = tk.StringVar()

self.patient_voice = tk.StringVar()

self.ibm_version = '2016-05-19'

self.ibm_x_watson_learning_opt_out = True

class MsSqlDatabase:

ODBC_DRIVER = '{ODBC Driver 13 for SQL Server}'

def __init__(self, conn_info):

self.conn_info = conn_info

def connect(self):

connection_string = ('DRIVER={driver};SERVER={server};DATABASE={db};'.format(

driver=self.ODBC_DRIVER,

server=self.conn_info.inst_srv.get(),

db=self.conn_info.inst_db.get()))

if self.conn_info.use_win_auth.get() == 1:

connection_string = connection_string + 'Trusted_Connection=yes;'

else:

connection_string = connection_string + 'UID={uid};PWD={password};'.format(

uid=self.conn_info.inst_login.get(),

password=self.conn_info.inst_passwd.get())

try:

conn = pyodbc.connect(connection_string, timeout=1)

except pyodbc.Error as err:

conn = None

return conn

def get_session(self, conn):

try:

cursor = conn.cursor()

cursor.execute(

"""SELECT UPPER(user_role), message_body FROM dbo.test_dialog t

WHERE t.Session_ID = ? ORDER BY t.ID ASC""", self.conn_info.session_id.get())

results = cursor.fetchall()

except pyodbc.Error as err:

results = None

return results

def get_user_id(self, conn):

try:

cursor = conn.cursor()

cursor.execute(

"""SELECT DISTINCT user_id from dbo.test_dialog t

WHERE t.session_id = ? AND user_role = 'client'""", self.conn_info.session_id.get())

results = cursor.fetchall()

except pyodbc.Error as err:

results = None

return results

def get_messages(self, conn):

try:

cursor = conn.cursor()

cursor.execute(

"""SELECT t.user_role, t.direction, LTRIM(RTRIM(f.RESULT)) AS message FROM dbo.test_dialog t

CROSS APPLY dbo.tvf_getConversations (t.message_body, 50, '.') f WHERE t.session_id = ?

ORDER BY t.id, f.id""", self.conn_info.session_id.get())

results = cursor.fetchall()

except pyodbc.Error as err:

results = None

return results

def get_messages_for_tone_analyse(self, conn):

try:

cursor = conn.cursor()

cursor.execute(

"""DECLARE @message VARCHAR(MAX)

SELECT @message = COALESCE(@message + ' ', '') + message_body

FROM dbo.test_dialog t WHERE t.Session_ID = ? AND user_role = 'client' ORDER BY t.ID ASC

SELECT @message""", self.conn_info.session_id.get())

results = cursor.fetchall()

results = [row[0] for row in results]

try:

messages = ''.join(results)

except TypeError:

messages = None

except pyodbc.Error as err:

messages = None

return messages

class AudioPlayer:

def __init__(self, credentials, voices):

self.credentials = credentials

self.voices = voices

def run(self, messages, voices, commands, status):

status['code'] = 0

status['message'] = 'OK'

try:

polly_service = boto3.client(

'polly',

aws_access_key_id = self.credentials['aws_access_key'],

aws_secret_access_key = self.credentials['aws_secret_key'],

region_name = 'eu-west-1')

except:

polly_service = None

if not polly_service:

status['code'] = 1

status['message'] = 'Cannot connect to AWS Polly service. Please check your API credentials are valid.'

return

is_stopped = False

is_paused = False

pygame.mixer.init(channels=1, frequency=44100)

for message in messages:

print(message)

try:

polly_response = polly_service.synthesize_speech(

OutputFormat='ogg_vorbis',

Text=message[2],

TextType='text',

VoiceId=voices[message[0]])

except:

polly_response = None

if not polly_response:

status['code'] = 2

status['message'] = 'Cannot connect to AWS Polly service. Please check your API credentials are valid.'

break

if "AudioStream" in polly_response:

with closing(polly_response["AudioStream"]) as stream:

data = stream.read()

filelike = io.BytesIO(data)

sound = pygame.mixer.Sound(file=filelike)

sound.play()

while pygame.mixer.get_busy() or is_paused:

if not commands.empty():

command = commands.get()

if command == 'STOP':

sound.stop()

is_stopped = True

break

if command == 'PAUSE':

is_paused = not is_paused

if is_paused:

sound.stop()

else:

sound.play()

time.sleep(0.010)

if is_stopped:

break

class AppFrame(object):

def __init__(self):

self.root = tk.Tk()

self.root.title('Polly Text-To-Speech GUI Prototype ver 1.1')

self.root.resizable(width=False, height=False)

self.conn_info = ConnectionInfo()

self.menubar = self.create_menubar()

self.connection_details_frame = ConnDetailsFrame(self.root, self)

self.session_frame = SessionDetailsFrame(self.root, self)

self.playback_frame = PlaybackDetailsFrame(self.root, self)

self.graph_frame = WatsonGraphDetailsFrame(self.root, self)

def create_menubar(self):

menubar = tk.Menu(self.root)

title_menu = tk.Menu(menubar, tearoff=0)

title_menu.add_command(label='API details...', command=self.on_api_details_select)

title_menu.add_command(label='About...', command=self.on_about_select)

menubar.add_cascade(label='About', menu=title_menu)

self.root.config(menu=menubar)

return menubar

def on_api_details_select(self):

dialog = APIDetailsDialog(self.root)

self.root.wait_window(dialog)

def on_about_select(self):

tk.messagebox.showinfo(title="About", message="Polly Text-To-Speech GUI Prototype ver 1.1")

def run(self):

self.root.mainloop()

class ConnDetailsFrame(ttk.LabelFrame):

def __init__(self, root, parent):

super(ConnDetailsFrame, self).__init__(root, text='1. Connection Details')

super(ConnDetailsFrame, self).grid(

row=0, column=0, columnspan=3, sticky='W',

padx=5, pady=5, ipadx=5, ipady=5

)

self.root = root

self.parent = parent

self.conn_info = parent.conn_info

self.create_notebook()

def create_notebook(self):

self.tab_control = ttk.Notebook(self)

self.create_frames()

self.create_labels()

self.create_entry()

self.create_checkbuttons()

def create_frames(self):

self.tab_db = ttk.Frame(self.tab_control)

self.tab_api = ttk.Frame(self.tab_control)

self.tab_control.add(self.tab_db, text="Database Connection Details ")

self.tab_control.add(self.tab_api, text="APIs Connection Details ")

self.tab_control.grid(row=0, column=0, sticky='E', padx=5, pady=5)

def create_labels(self):

ttk.Label(self.tab_db, text="Server/Instance Name:").grid(row=0, column=0, sticky='E', padx=5, pady=(15, 5))

ttk.Label(self.tab_db, text="Database Name:").grid(row=1, column=0, sticky='E', padx=5, pady=5)

ttk.Label(self.tab_db, text="User Name:").grid(column=0, row=3, sticky="E", padx=5, pady=5)

ttk.Label(self.tab_db, text="Password:").grid(column=0, row=4, sticky="E", padx=5, pady=(5, 10))

ttk.Label(self.tab_api, text="AWS Access Key ID:").grid(column=0, row=1, sticky="E", padx=5, pady=(5, 5))

ttk.Label(self.tab_api, text="AWS Secret Access Key:").grid(column=0, row=2, sticky="E", padx=5, pady=5)

ttk.Label(self.tab_api, text="IBM Watson Username:").grid(column=0, row=4, sticky="E", padx=5, pady=(5, 5))

ttk.Label(self.tab_api, text="IBM Watson Password:").grid(column=0, row=5, sticky="E", padx=5, pady=(5,15))

def create_checkbuttons(self):

check_use_win_auth = ttk.Checkbutton(self.tab_db, onvalue=1, offvalue=0,

variable=self.conn_info.use_win_auth,

text='Use Windows Authentication',

command=self.on_use_win_auth_change)

check_use_win_auth.grid(row=2, column=0, sticky='W', padx=15, pady=(15,5))

check_use_aws_api = ttk.Checkbutton(self.tab_api, onvalue=1, offvalue=0,

variable=self.conn_info.use_aws_api, text='Use AWS Text-To-Speech API')

check_use_aws_api.grid(row=0, column=0, sticky='W', padx=15, pady=(15,5))

check_use_ibm_api = ttk.Checkbutton(self.tab_api, onvalue=1, offvalue=0,

variable=self.conn_info.use_ibm_api,

text='Use IBM Watson API',

command=self.on_use_ibm_api_change)

check_use_ibm_api.grid(row=3, column=0, sticky='W', padx=15, pady=(15,5))

def create_entry(self):

entry_db_server_name = ttk.Entry(self.tab_db, width=60, textvariable=self.conn_info.inst_srv)

entry_db_server_name.grid(row=0, column=1, sticky='W', padx=10, pady=(15, 5))

entry_db_name = ttk.Entry(self.tab_db, width=60, textvariable=self.conn_info.inst_db)

entry_db_name.grid(row=1, column=1, sticky='W', padx=10, pady=5)

self.entry_db_user_name = ttk.Entry(self.tab_db, width=60, textvariable=self.conn_info.inst_login)

self.entry_db_user_name.grid(row=3, column=1, padx=10, pady=5)

self.entry_db_password = ttk.Entry(self.tab_db, width=60, textvariable=self.conn_info.inst_passwd, show="*")

self.entry_db_password.grid(row=4, column=1, padx=10, pady=(5, 10))

entry_aws_access_key = ttk.Entry(self.tab_api, width=60,

textvariable=self.conn_info.aws_access_key_id)

entry_aws_access_key.grid(row=1, column=1, sticky='W', padx=10, pady=(5, 5))

entry_aws_secret_key = ttk.Entry(self.tab_api, width=60,

textvariable=self.conn_info.aws_secret_access_key)

entry_aws_secret_key.grid(row=2, column=1, padx=5, pady=5)

self.entry_ibm_username = ttk.Entry(self.tab_api, width=60,

textvariable=self.conn_info.ibm_username)

self.entry_ibm_username.grid(row=4, column=1, padx=5, pady=5)

self.entry_ibm_password = ttk.Entry(self.tab_api, width=60,

textvariable=self.conn_info.ibm_passwd,show="*")

self.entry_ibm_password.grid(row=5, column=1, padx=5, pady=(5,15))

def on_use_win_auth_change(self):

if (self.conn_info.use_win_auth.get() == 1):

self.entry_db_user_name.configure(state='disabled')

self.entry_db_password.configure(state='disabled')

else:

self.entry_db_user_name.configure(state='normal')

self.entry_db_password.configure(state='normal')

def on_use_ibm_api_change(self):

if (self.conn_info.use_ibm_api.get() == 0):

self.entry_ibm_username.configure(state='disabled')

self.entry_ibm_password.configure(state='disabled')

else:

self.entry_ibm_username.configure(state='normal')

self.entry_ibm_password.configure(state='normal')

class SessionDetailsFrame(ttk.LabelFrame):

def __init__(self, root, parent):

super(SessionDetailsFrame, self).__init__(root, text='2. Session Details')

super(SessionDetailsFrame, self).grid(row=1, column=0, sticky='NW', padx=5, pady=5, ipadx=5, ipady=5, rowspan=2)

self.parent = parent

self.conn_info = parent.conn_info

self.create_entries()

self.create_buttons()

self.create_scrolled_text()

def create_entries(self):

ttk.Entry(

self, justify="center", width=18, font="Helvetica 18 bold",

textvariable=self.conn_info.session_id).grid(row=1, column=2, padx=3, pady=5, sticky='W')

def create_buttons(self):

search_session_btn = ttk.Button(self, text="SEARCH SESSION ID", command=self.on_search_session_click)

search_session_btn.grid(row=1, column=3, ipadx=8, ipady=6)

def create_scrolled_text(self):

self.dialog_st = scrolledtext.ScrolledText(self, width=45, height=13, wrap=tk.WORD)

self.dialog_st.grid(column=2, row=2, padx=4, pady=4, columnspan=2, sticky='w')

style = ttk.Style()

style.configure("TButton", foreground="red")

def on_search_session_click(self):

db = MsSqlDatabase(self.conn_info)

conn = db.connect()

if conn:

results = db.get_session(conn)

if results:

self.dialog_st.delete('1.0', tk.END)

for role, message in results:

self.dialog_st.insert(tk.END, '{}:\n'.format(role), 'role')

self.dialog_st.insert(tk.END, '{}\n\n'.format(message), 'message')

self.dialog_st.tag_config('role', foreground='red', font="Courier 11 bold")

else:

tk.messagebox.showwarning(title="Warning", message="Nominated Session ID not found in the database!")

else:

tk.messagebox.showwarning(title="Warning", message="Cannot connect to database server!")

class PlaybackDetailsFrame(ttk.LabelFrame):

def __init__(self, root, parent):

super(PlaybackDetailsFrame, self).__init__(root, text='3. Playback Details')

super(PlaybackDetailsFrame, self).grid(row=1, column=1, sticky='WN', padx=5, pady=5, ipadx=5, ipady=5)

self.root = root

self.parent = parent

self.conn_info = parent.conn_info

self.create_labels()

self.create_combobox()

self.create_buttons()

root.protocol('WM_DELETE_WINDOW', self.on_closing)

self.process_manager = multiprocessing.Manager()

self.player_process = None

self.player_commands = None

self.player_status = None

def create_labels(self):

l1 = ttk.Label(self, text="Clinician Voice:").grid(row=0, column=0, sticky='W', padx=5, pady=5)

l2 = ttk.Label(self, text="Patient Voice:").grid(row=0, column=1, sticky='W', padx=5, pady=5)

var1 = tk.StringVar(self.root)

var2 = tk.StringVar(self.root)

def create_combobox(self):

clinician = ttk.Combobox(self, width=11, textvariable=self.conn_info.clinician_voice)

clinician.grid(row=1, column=0, padx=5, pady=5, sticky='NW')

clinician['values'] = (

'Russell',

'Nicole',

'Amy',

'Brian',

'Emma',

'Raveena',

'Ivy',

'Joanna',

'Joey',

'Justin',

'Kendra',

'Kimberly',

'Salli'

)

clinician.current(0)

patient = ttk.Combobox(self, width=11, textvariable=self.conn_info.patient_voice)

patient.grid(row=1, column=1, padx=(5, 0), pady=5, sticky='NW')

patient['values'] = (

'Nicole',

'Russell',

'Amy',

'Brian',

'Emma',

'Raveena',

'Ivy',

'Joanna',

'Joey',

'Justin',

'Kendra',

'Kimberly',

'Salli')

patient.current(0)

def create_buttons(self):

play_session_btn = ttk.Button(self, text="PLAY", width=25, command=self.on_play_session_click)

play_session_btn.grid(row=2, column=0, columnspan=2, padx=(10, 2), pady=(20, 5), sticky='WE')

pause_session_btn = ttk.Button(self, text="PAUSE", width=25, command=self.on_pause_session_click)

pause_session_btn.grid(row=3, column=0, columnspan=2, padx=(10, 2), pady=5, sticky='WE')

stop_session_btn = ttk.Button(self, text="STOP", width=25, command=self.on_stop_session_click)

stop_session_btn.grid(row=4, column=0, columnspan=2, padx=(10, 2), pady=(5, 5), sticky='WE')

def on_play_session_click(self):

if self.player_process:

if self.player_process.is_alive():

self.player_commands.put('STOP')

db = MsSqlDatabase(self.conn_info)

db_conn = db.connect()

if db_conn:

messages = db.get_messages(db_conn)

if messages:

is_credentials_valid = True

if len(self.conn_info.aws_access_key_id.get()) == 0 or \

len(self.conn_info.aws_secret_access_key.get()) == 0:

is_credentials_valid = False

if (is_credentials_valid):

credentials = {

'aws_access_key': self.conn_info.aws_access_key_id.get(),

'aws_secret_key': self.conn_info.aws_secret_access_key.get()

}

voices = {

'clinician': self.conn_info.clinician_voice.get(),

'client': self.conn_info.patient_voice.get()

}

player = AudioPlayer(credentials, voices)

self.player_commands = self.process_manager.Queue()

self.player_status = self.process_manager.dict()

self.player_process = multiprocessing.Process(

target=player.run,

args=(messages, voices, self.player_commands, self.player_status))

self.player_process.start()

self.root.after(500, lambda: self.check_player_status(self.player_process, self.player_status))

else:

tk.messagebox.showwarning(title="Warning", message="AWS access or secret key is empty")

else:

tk.messagebox.showwarning(title="Warning", message="Nominated Session ID not found in the database!")

else:

tk.messagebox.showwarning(title="Warning", message="Cannot connect to database server")

def on_pause_session_click(self):

if self.player_commands:

self.player_commands.put("PAUSE")

def on_stop_session_click(self):

if self.player_commands:

self.player_commands.put("STOP")

def on_closing(self):

if self.player_process:

if self.player_process.is_alive():

self.player_commands.put('STOP')

self.player_process.join()

self.root.destroy()

def check_player_status(self, player_process, player_status):

if not player_process.is_alive():

print('Player status: {}, {}'.format(player_status['code'], player_status['message']))

if player_status['code'] != 0:

tk.messagebox.showwarning(title="Warning", message=player_status['message'])

else:

self.root.after(500, lambda: self.check_player_status(player_process, player_status))

class WatsonGraphDetailsFrame(ttk.LabelFrame):

def __init__(self, root, parent):

super(WatsonGraphDetailsFrame, self).__init__(root, text='4. Analysis Graph Details')

super(WatsonGraphDetailsFrame, self).grid(row=2, column=1, sticky='WE', padx=5, pady=5, ipadx=5, ipady=1)

self.root = root

self.parent = parent

self.conn_info = parent.conn_info

self.create_buttons()

def create_buttons(self):

self.tone_analysis_btn = ttk.Button(self, text='PERFORM TONE ANALYSIS', width=28,

command=self.tone_analysis_btn_click)

self.tone_analysis_btn.grid(row=0, column=1, padx=(12, 2), pady=(11, 11), sticky='EW')

def tone_analysis_btn_click(self):

if len(self.conn_info.ibm_username.get()) == 0 or len(self.conn_info.ibm_passwd.get()) == 0 \

or self.conn_info.use_ibm_api.get() == 0:

tk.messagebox.showwarning(title='Warning',

message='\'IBM Watson API\' username or password is empty or disabled')

return

db = MsSqlDatabase(self.conn_info)

conn = db.connect()

if not conn:

tk.messagebox.showwarning(title='Warning', message='Cannot connect to database server!')

return

messages = db.get_messages_for_tone_analyse(conn)

if not messages:

tk.messagebox.showwarning(title='Warning', message='Nominated Session ID not found in the database!')

return

if len(messages.split()) < 3: tk.messagebox.showwarning(title='Warning', message='Too few words provided!') return if sys.getsizeof(messages) > 128000:

tk.messagebox.showwarning(title='Warning', message='The message provided is too long for API string limit.')

return

db_user_id = db.get_user_id(conn)

if not db_user_id:

tk.messagebox.showwarning(title='Warning', message='Cannot get User ID for given Session ID')

return

client = { 'session_id': self.conn_info.session_id.get(),

'user_id': db_user_id[0][0] }

tone_analyzer = wdc.ToneAnalyzerV3(

version=self.conn_info.ibm_version,

username=self.conn_info.ibm_username.get(),

password=self.conn_info.ibm_passwd.get(),

x_watson_learning_opt_out=self.conn_info.ibm_x_watson_learning_opt_out

)

try:

tone = tone_analyzer.tone(messages, sentences=False, content_type='text/plain')

except:

tk.messagebox.showwarning(title='Warning', message='Cannot connect to IBM Watson service')

return

dialog = ToneAnalysisDialog(self, client, tone)

self.wait_window(dialog)

class APIDetailsDialog(tk.Toplevel):

def __init__(self, parent):

super(APIDetailsDialog, self).__init__(parent)

self.parent = parent

self.title('API Details')

self.resizable(width=False, height=False)

frame = ttk.LabelFrame(self, text="Polly Text-To-Speech GUI Prototype API Details")

ttk.Label(frame, text="Text to Speech API:").grid(row=0, column=0, sticky='W')

ttk.Label(frame, text="AWS Polly").grid(row=1, column=0, sticky='W', pady=(0, 10))

ttk.Label(frame, text="Tone Analyser API:").grid(row=2, column=0, sticky='W')

ttk.Label(frame, text="IBM Watson").grid(row=3, column=0, sticky='W')

frame.pack(side=tk.TOP, fill=tk.BOTH, padx=10, pady=10)

close_btn = ttk.Button(self, text='Close', command=self.on_close_btn_click)

close_btn.pack(padx=5, pady=5, side=tk.BOTTOM)

self.update_idletasks()

w = self.winfo_width()

h = self.winfo_height()

x = (self.winfo_screenwidth() - w) // 2

y = (self.winfo_screenheight() - h) // 2

self.geometry('{}x{}+{}+{}'.format(w, h, x, y))

self.grab_set()

def on_close_btn_click(self):

self.destroy()

class ToneAnalysisDialog(tk.Toplevel):

def __init__(self, parent, client, tone):

super(ToneAnalysisDialog, self).__init__(parent)

self.parent = parent

self.client = client

self.tone = tone

self.title('Tone Analysis')

plot_widget = self.create_tone_analyse_plot()

plot_widget.pack(side=tk.TOP, fill=tk.BOTH, expand=1)

close_btn = ttk.Button(self, text='Close', command=self.on_close_btn_click)

close_btn.pack(padx=5, pady=5, side=tk.BOTTOM)

self.grab_set()

def create_tone_analyse_plot(self):

emotion_tone = {}

language_tone = {}

social_tone = {}

for cat in self.tone['document_tone']['tone_categories']:

print('Category:', cat['category_name'])

if cat['category_name'] == 'Emotion Tone':

for tone in cat['tones']:

print('-', tone['tone_name'], tone['score'])

emotion_tone.update({tone['tone_name']: tone['score']})

if cat['category_name'] == 'Social Tone':

for tone in cat['tones']:

print('-', tone['tone_name'], tone['score'])

social_tone.update({tone['tone_name']: tone['score']})

if cat['category_name'] == 'Language Tone':

for tone in cat['tones']:

print('-', tone['tone_name'], tone['score'])

language_tone.update({tone['tone_name']: tone['score']})

max_tone_values = list(emotion_tone.values()) + list(language_tone.values()) + list(social_tone.values())

if max(max_tone_values) > 0.9:

max_tone_value = 1

else:

max_tone_value = max(max_tone_values) + 0.1

mpl.style.use('seaborn')

fig = mpl.figure.Figure(figsize=(7, 7))

canvas = FigureCanvasTkAgg(fig, master=self)

fig.suptitle(

'Tones Analysis of Patient ID \'{}\', Chat Data for Session ID \'{}\'\nScale range: 0 (min) -- 1 (max)'

.format(self.client['user_id'], self.client['session_id']), fontsize=14, fontweight='bold')

keys = sorted(emotion_tone.keys(), reverse=True)

values = [emotion_tone[key] for key in keys]

y_pos = np.arange(len(values))

ax1 = fig.add_subplot(311)

ax1.barh(y_pos, values, align='center', alpha=0.6, color='limegreen')

ax1.set_yticks(y_pos)

ax1.set_yticklabels(keys)

ax1.set_title('Emotion Tone', fontsize=12)

ax1.set_xlim([0, max_tone_value])

keys = sorted(social_tone.keys(), reverse=True)

values = [social_tone[key] for key in keys]

y_pos = np.arange(len(values))

ax2 = fig.add_subplot(312)

ax2.barh(y_pos, values, align='center', alpha=0.6, color='red')

ax2.set_yticks(y_pos)

ax2.set_yticklabels(keys)

ax2.set_title('Social Tone', fontsize=12)

ax2.set_xlim([0, max_tone_value])

keys = sorted(language_tone.keys(), reverse=True)

values = [language_tone[key] for key in keys]

y_pos = np.arange(len(values))

ax3 = fig.add_subplot(313)

ax3.barh(y_pos, values, height=0.4, align='center', alpha=0.6, color='deepskyblue')

ax3.set_yticks(y_pos)

ax3.set_yticklabels(keys)

ax3.set_title('Language Tone', fontsize=12)

ax3.set_xlim([0, max_tone_value])

fig.tight_layout(pad=0.9, w_pad=0.5, h_pad=1.7)

fig.subplots_adjust(top=0.85, left=0.20)

canvas.show()

widget = canvas.get_tk_widget()

return widget

def on_close_btn_click(self):

self.destroy()

if __name__ == "__main__":

app = AppFrame()

app.run()

This concludes this two-part series on building a simple GUI app in Python and Tkinter using AWS and IBM machine learning cloud services. Now you can see that anyone, with a little bit of elbow grease, minimal Python skills and little bit of time to spare (no PhD required!) can take advantage of these machine learning services and create something interesting.

Posted in: Uncategorized

Tags: Analytics, Artificial Intelligence, AWS, Cloud Computing, IBM Watson, Machine Learning, Python, SQL, SQL Server